kohonenSOM_trainer

Trains a Kohonen network.

Synopsis

#include <imsls.h>

Imsls_f_kohonenSOM *imsls_f_kohonenSOM_trainer (float fcn(), float lcn(), int dim, int nrow, int ncol, int nobs, float data[], …, 0)

The type double function is imsls_d_kohonenSOM_trainer.

Required Arguments

float fcn (int nrow, int ncol, int total_iter, int t, float d) (Input/Output)

User-supplied neighborhood function. In the simplest form, the neighborhood function h(d, t) is 1 for all nodes closest to the BMU and 0 for others, but a Gaussian function is also commonly used. For example:

Arguments

int nrow (Input)

The number of rows in the node grid.

int ncol (Input)

The number of columns in the node grid.

int total_iter (Input)

The number of iterations for training.

int t (Input)

The current iteration of the training.

float d (Input)

The lattice distance between the best matching node and the current node.

Return Value

The computed neighborhood function value.

float lcn (int nrow, int ncol, int total_iter, int t) (Input/Output)

User-supplied learning coefficient function. The monotonically decreasing learning coefficient function α(t) is a scalar factor that defines the size of the update correction. The value of α(t) decreases with the step index t. Typical forms are linear, power, and inverse time/step. For example:

power:

$$\alpha(t) = \alpha_0 \left( \frac{\alpha_T}{\alpha_0} \right)^{t/T}$$

where t is the current iteration, T = total_iter, α0 is the initial learning coefficient, and αT is the final learning coefficient

inverse time:

$$\alpha(t) = \frac{A}{t + B}$$

where A and B are user-determined constants

Arguments

int nrow (Input)

The number of rows in the node grid.

int ncol (Input)

The number of columns in the node grid.

int total_iter (Input)

The number of iterations for training.

int t (Input)

The current iteration of the training.

Return Value

The computed learning coefficient.

int dim (Input)

The number of weights for each node in the node grid. dim must be greater than zero.

int nrow (Input)

The number of rows in the node grid. nrow must be greater than zero.

int ncol (Input)

The number of columns in the node grid. ncol must be greater than zero.

int nobs (Input)

The number of observations in data. nobs must be greater than zero.

float data[] (Input)

An nobs × dim array containing the data to be used for training the Kohonen network.

Return Value

A pointer to an Imsls_f_kohonenSOM data structure containing the trained Kohonen network. This space can be released by using the imsls_free function. Please see Data Structures for a description of this data structure.

Synopsis with Optional Arguments

#include <imsls.h>

Imsls_f_kohonenSOM *imsls_f_kohonenSOM_trainer (float fcn(), float lcn(), int dim, int nrow, int ncol, int nobs, float data[],

IMSLS_RECTANGULAR, or

IMSLS_HEXAGONAL,

IMSLS_VON_NEUMANN, or

IMSLS_MOORE,

IMSLS_WRAP_AROUND,

IMSLS_RANDOM_SEED, int seed,

IMSLS_ITERATIONS, int total_iter,

IMSLS_INITIAL_WEIGHTS, float weights[],

IMSLS_FCN_W_DATA, float fcn(), void *data,

IMSLS_LCN_W_DATA, float lcn(), void *data,

IMSLS_RECONSTRUCTION_ERROR, float *error,

0)

Optional Arguments

IMSLS_RECTANGULAR, (Input)

or

IMSLS_HEXAGONAL, (Input)

Specifies whether a rectangular or a hexagonal grid should be used. The optional arguments IMSLS_RECTANGULAR and IMSLS_HEXAGONAL are mutually exclusive.

| Argument | Action |
| --- | --- |
| IMSLS_RECTANGULAR | Use a rectangular grid. |
| IMSLS_HEXAGONAL | Use a hexagonal grid. |

Default: A rectangular grid is used.

IMSLS_VON_NEUMANN, (Input)

or

IMSLS_MOORE, (Input)

Specifies whether the Von Neumann or the Moore neighborhood type should be used. The optional arguments IMSLS_VON_NEUMANN and IMSLS_MOORE are mutually exclusive.

| Argument | Action |
| --- | --- |
| IMSLS_VON_NEUMANN | Use the Von Neumann neighborhood type. |
| IMSLS_MOORE | Use the Moore neighborhood type. |

Default: The Von Neumann neighborhood type is used.

IMSLS_WRAP_AROUND, (Input)

Wrap around the opposite edges. A hexagonal grid must have an even number of rows to wrap around.
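To illustrate what wrapping means for distances along one axis of a rectangular grid, here is a small sketch. The helper axis_distance is hypothetical; how the library computes wrapped distances internally, especially on hexagonal grids, is not described here.

```c
#include <assert.h>
#include <stdlib.h>

/* Distance between indices a and b along an axis of length n.
   With wrap != 0 the axis is treated as a ring, so the shorter
   way around is used. */
int axis_distance(int a, int b, int n, int wrap)
{
    int direct;

    assert(n > 0 && a >= 0 && a < n && b >= 0 && b < n);
    direct = abs(a - b);
    if (wrap && n - direct < direct)
        return n - direct;
    return direct;
}
```

On a 40-node axis, nodes 0 and 39 are 39 steps apart without wrapping and 1 step apart with it, so a BMU on one edge can pull nodes on the opposite edge.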

Default: Do not wrap around the opposite edges.

IMSLS_RANDOM_SEED, int seed (Input)

The seed of the random number generator used in generating the initial weights. If seed is 0, a value is computed using the system clock; hence, the results may be different between different calls with the same input.

Default: seed = 0.

IMSLS_ITERATIONS, int total_iter (Input)

The number of iterations to be used for training.

Default: total_iter = 100.

IMSLS_INITIAL_WEIGHTS, float weights[] (Input)

The initial weights of the nodes.

Default: Initial weights are generated internally using a uniform random number generator.

IMSLS_FCN_W_DATA, float fcn (int nrow, int ncol, int total_iter, int t, float d, void *data), void *data (Input)

float fcn (int nrow, int ncol, int total_iter, int t, float d, void *data) (Input)

User-supplied neighborhood function, which also accepts a pointer to data that is supplied by the user. data is a pointer to the data to be passed to the user-supplied function.

Arguments

int nrow (Input)

The number of rows in the node grid.

int ncol (Input)

The number of columns in the node grid.

int total_iter (Input)

The number of iterations for training.

int t (Input)

The current iteration of the training.

float d (Input)

The lattice distance between the best matching node and the current node.

void *data (Input)

A pointer to the data to be passed to the user-supplied function.

Return Value

The computed neighborhood function value.

void *data (Input)

A pointer to the data to be passed to the user-supplied function.

IMSLS_LCN_W_DATA, float lcn (int nrow, int ncol, int total_iter, int t, void *data), void *data (Input)

float lcn (int nrow, int ncol, int total_iter, int t, void *data) (Input)

User-supplied learning coefficient function, which also accepts a pointer to data that is supplied by the user. data is a pointer to the data to be passed to the user-supplied function.

Arguments

int nrow (Input)

The number of rows in the node grid.

int ncol (Input)

The number of columns in the node grid.

int total_iter (Input)

The number of iterations for training.

int t (Input)

The current iteration of the training.

void *data (Input)

A pointer to the data to be passed to the user-supplied function.

Return Value

The computed learning coefficient.

void *data (Input)

A pointer to the data to be passed to the user-supplied function.

IMSLS_RECONSTRUCTION_ERROR, float *error (Output)

The sum of the Euclidean distances between the input, data, and the nodes in the trained Kohonen network.

Description

A self-organizing map (SOM), also known as a Kohonen map or Kohonen SOM, is a technique for gathering high-dimensional data into clusters that are constrained to lie in low dimensional space, usually two dimensions. A Kohonen map is a widely used technique for the purpose of feature extraction and visualization for very high dimensional data in situations where classifications are not known beforehand. The Kohonen SOM is equivalent to an artificial neural network having inputs linked to every node in the network. Self-organizing maps use a neighborhood function to preserve the topological properties of the input space.

In a Kohonen map, nodes are arranged in a rectangular or hexagonal grid or lattice. The input is connected to each node, and the output of the Kohonen map is the zero-based (i, j) index of the node that is closest to the input. A Kohonen map involves two steps: training and forecasting. Training builds the map using input examples (vectors), and forecasting classifies a new input.

During training, an input vector is fed to the network. The input's Euclidean distance from all the nodes is calculated. The node with the shortest distance is identified and is called the Best Matching Unit, or BMU. After identifying the BMU, the weights of the BMU and the nodes closest to it in the SOM lattice are updated towards the input vector. The magnitude of the update decreases with time and with distance (within the lattice) from the BMU. The weights of the nodes surrounding the BMU are updated according to:

$$W_{t+1} = W_t + \alpha(t)\, h(d,t)\, (D_t - W_t)$$

where $W_t$ represents the node weights, α(t) is the monotonically decreasing learning coefficient function, h(d,t) is the neighborhood function, d is the lattice distance between the node and the BMU, and $D_t$ is the input vector.
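Applied to a single node, the update rule is a component-wise step toward the input vector. A minimal sketch follows; update_node is a hypothetical helper, with alpha and h standing for the already evaluated α(t) and h(d,t).

```c
#include <assert.h>
#include <stddef.h>

/* Move one node's weight vector w (length dim) toward the input
   vector x by the fraction alpha * h, in place:
   w <- w + alpha * h * (x - w). */
void update_node(float *w, const float *x, int dim, float alpha, float h)
{
    int k;

    assert(w != NULL && x != NULL && dim > 0);
    for (k = 0; k < dim; k++)
        w[k] += alpha * h * (x[k] - w[k]);
}
```

With alpha * h = 1 the node jumps onto the input vector, and with h = 0 (a node outside the neighborhood) it is left unchanged.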

The monotonically decreasing learning coefficient function α(t) is a scalar factor that defines the size of the update correction. The value of α(t) decreases with the step index t.

The neighborhood function h(d,t) depends on the lattice distance d between the node and the BMU, and represents the strength of the coupling between the node and BMU. In the simplest form, the value of h(d,t) is 1 for all nodes closest to the BMU and 0 for others, but a Gaussian function is also commonly used. Regardless of the functional form, the neighborhood function shrinks with time (Hollmén, 15.2.1996). Early on, when the neighborhood is broad, the self-organizing takes place on the global scale. When the neighborhood has shrunk to just a couple of nodes, the weights converge to local estimates.

Note that in a rectangular grid, the BMU has four closest nodes for the Von Neumann neighborhood type, or eight closest nodes for the Moore neighborhood type. In a hexagonal grid, the BMU has six closest nodes.
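The neighbor counts for the rectangular grid can be reproduced with two standard lattice metrics. This sketch assumes that the Von Neumann type corresponds to Manhattan distance and the Moore type to Chebyshev distance, which is the usual convention; the hexagonal case is not covered, and all function names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Von Neumann neighborhood: Manhattan (city-block) distance. */
int von_neumann_distance(int i1, int j1, int i2, int j2)
{
    return abs(i1 - i2) + abs(j1 - j2);
}

/* Moore neighborhood: Chebyshev (chessboard) distance. */
int moore_distance(int i1, int j1, int i2, int j2)
{
    int di = abs(i1 - i2), dj = abs(j1 - j2);
    return (di > dj) ? di : dj;
}

/* Count the nodes of an nrow x ncol grid at distance 1 from (bi, bj). */
int count_closest(int nrow, int ncol, int bi, int bj,
                  int (*dist)(int, int, int, int))
{
    int i, j, count = 0;

    assert(nrow > 0 && ncol > 0 && dist != NULL);
    for (i = 0; i < nrow; i++)
        for (j = 0; j < ncol; j++)
            if (dist(bi, bj, i, j) == 1)
                count++;
    return count;
}
```

For an interior BMU the counts are four and eight, matching the text; a BMU in a corner of a grid without wrap-around has fewer closest nodes.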

During training, this process is repeated for a number of iterations on all input vectors.

During forecasting, the node with the shortest Euclidean distance is the winning node, and its (i, j) index is the output.
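The search for the winning node can be sketched as a scan over all nodes. The helper find_bmu is hypothetical, and it assumes that the weights are stored row by row with the dim weights of node (i, j) starting at offset (i * ncol + j) * dim; the storage order is not stated in this document.

```c
#include <assert.h>
#include <float.h>
#include <stddef.h>

/* Find the node whose weight vector has the smallest squared
   Euclidean distance to the input x (same winner as for the
   unsquared distance). The zero-based index is stored in *bi, *bj. */
void find_bmu(const float *weights, int nrow, int ncol, int dim,
              const float *x, int *bi, int *bj)
{
    float best = FLT_MAX;
    int i, j, k;

    assert(weights && x && bi && bj && nrow > 0 && ncol > 0 && dim > 0);
    for (i = 0; i < nrow; i++) {
        for (j = 0; j < ncol; j++) {
            /* ASSUMED row-major layout of the node weights. */
            const float *w = weights + ((size_t)i * ncol + j) * dim;
            float dist2 = 0.0f;

            for (k = 0; k < dim; k++) {
                float diff = x[k] - w[k];
                dist2 += diff * diff;
            }
            if (dist2 < best) { /* ties keep the first node found */
                best = dist2;
                *bi = i;
                *bj = j;
            }
        }
    }
}
```

The same scan serves both phases: during training it identifies the BMU for each input vector, and during forecasting its result is the output.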

Data Structures

| Field | Description |
| --- | --- |
| int grid | 0 = rectangular grid. Otherwise, hexagonal grid. |
| int type | 0 = Von Neumann neighborhood type. Otherwise, Moore neighborhood type. |
| int wrap | 0 = do not wrap around node edges. Otherwise, wrap around node edges. |
| int dim | Number of weights in each node. |
| int nrow | Number of rows in the node grid. |
| int ncol | Number of columns in the node grid. |
| float *weights | Array of length nrow × ncol × dim containing the weights of the nodes. |
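For orientation, the fields above could be mirrored by a struct like the one below. This is a hypothetical stand-in named som_model, not the declaration from imsls.h, and the accessor assumes a row-major layout of weights, which this document does not state.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical mirror of the documented fields; the real type is
   declared in imsls.h and should be used in actual programs. */
typedef struct {
    int grid;       /* 0 = rectangular, otherwise hexagonal */
    int type;       /* 0 = Von Neumann, otherwise Moore     */
    int wrap;       /* 0 = no wrap-around                   */
    int dim;        /* number of weights per node           */
    int nrow;       /* rows in the node grid                */
    int ncol;       /* columns in the node grid             */
    float *weights; /* nrow * ncol * dim node weights       */
} som_model;

/* Return a pointer to the dim weights of node (i, j), ASSUMING a
   row-major layout of the weights array. */
float *node_weights(const som_model *m, int i, int j)
{
    assert(m != NULL && m->weights != NULL);
    assert(i >= 0 && i < m->nrow && j >= 0 && j < m->ncol);
    return m->weights + ((size_t)i * m->ncol + j) * m->dim;
}
```

Under that assumption, node_weights(m, i, j)[k] is the k-th weight of the node in row i and column j, for example one RGB component in a color map.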

Example

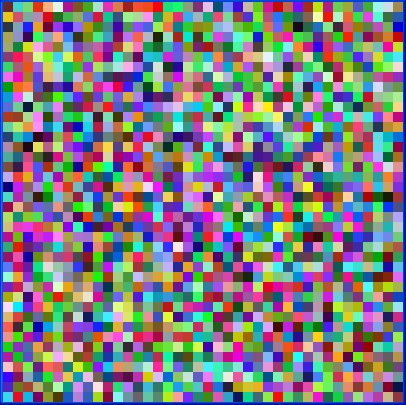

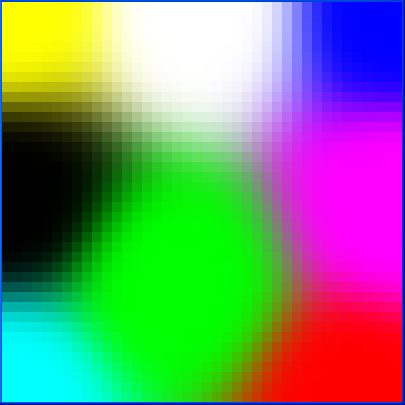

This example creates a Kohonen network with 40 × 40 nodes. Each node has three weights, representing the RGB values of a color. This network is trained with eight colors using 500 iterations. Then, the example prints out a forecast result. Initially, the image of the nodes is:

After the training, the image is:

#include <stdio.h>
#include <math.h>
#include <imsls.h>

float fcn(int nrow, int ncol, int total_iter, int t, float d);
float lcn(int nrow, int ncol, int total_iter, int t);

int main() {
    Imsls_f_kohonenSOM *kohonen = NULL;
    int dim = 3, nrow = 40, ncol = 40, nobs = 8;

    /* Training data: eight RGB colors. */
    float data[8][3] = {
        {1.0, 0.0, 0.0},
        {0.0, 1.0, 0.0},
        {0.0, 0.0, 1.0},
        {1.0, 1.0, 0.0},
        {1.0, 0.0, 1.0},
        {0.0, 1.0, 1.0},
        {0.0, 0.0, 0.0},
        {1.0, 1.0, 1.0}
    };
    int *forecasts = NULL;

    /* A single color to classify with the trained network. */
    float fdata[1][3] = {
        {0.25, 0.5, 0.75}
    };
    float error;

    kohonen = imsls_f_kohonenSOM_trainer(fcn, lcn, dim, nrow, ncol,
        nobs, &data[0][0],
        IMSLS_RANDOM_SEED, 123457,
        IMSLS_ITERATIONS, 500,
        IMSLS_RECONSTRUCTION_ERROR, &error,
        0);

    forecasts =
        imsls_f_kohonenSOM_forecast(kohonen, 1, &fdata[0][0], 0);

    printf("The output node is at (%d, %d).\n",
        forecasts[0], forecasts[1]);
    printf("Reconstruction error is %f.\n", error);

    /* Free up memory. */
    imsls_free(kohonen->weights);
    imsls_free(kohonen);
    imsls_free(forecasts);

    return 0;
}

/* Neighborhood function. */
float fcn(int nrow, int ncol, int total_iter, int t, float d) {
    float factor, c;
    int max;

    max = nrow > ncol ? nrow : ncol;

    /* A Gaussian function whose width c shrinks linearly with t. */
    factor = max / 4.0;
    c = (float) (total_iter - t) / ((float) total_iter / factor);

    return exp(-(d * d) / (2.0 * c * c));
}

/* Learning coefficient function: exponential decay from 0.07. */
float lcn(int nrow, int ncol, int total_iter, int t) {
    float initialLearning = 0.07;

    return initialLearning * exp(-(float) t / (float) total_iter);
}

Output

The output node is at (25, 11).

Reconstruction error is 13589.462891.