This example reads a previously trained network that was serialized into four files (the network, its trainer, and the xData and yData training patterns) and uses it to obtain information about the network and to generate forecasts. Training was done using the code for the FeedForwardNetwork Example 1.

The network training targets were generated using the relationship:

y = 10*X1 + 20*X2 + 30*X3 + 2.0*X4, where

X1 to X3 are the three binary columns corresponding to categories 1 to 3 of the nominal attribute, and X4 is the continuous attribute, which ranges from 0 to 10 and is scaled to [0, 1] before it is passed to the network.
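As a rough sketch of the relationship above, the following plain-Java snippet binary-encodes a nominal class and evaluates the target. The names `TargetSketch`, `encode`, and `target` are hypothetical and not part of the library. X4 is taken in its original 0 to 10 units, which matches the expected values printed by the example.

```java
// Hypothetical helper for illustration; not part of com.imsl.datamining.neural.
class TargetSketch {

    // Binary-encode a nominal class (1, 2 or 3) into the columns X1..X3.
    static double[] encode(int nominalClass) {
        if (nominalClass < 1 || nominalClass > 3) {
            throw new IllegalArgumentException("class must be 1, 2 or 3");
        }
        double[] x = new double[3];
        x[nominalClass - 1] = 1.0;
        return x;
    }

    // Evaluate y = 10*X1 + 20*X2 + 30*X3 + 2*X4, with X4 in unscaled units.
    static double target(int nominalClass, double x4) {
        double[] x = encode(nominalClass);
        return 10.0 * x[0] + 20.0 * x[1] + 30.0 * x[2] + 2.0 * x4;
    }

    public static void main(String[] args) {
        System.out.println(target(1, 0.0));  // prints 10.0
        System.out.println(target(2, 5.0));  // prints 30.0
        System.out.println(target(3, 10.0)); // prints 50.0
    }
}
```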

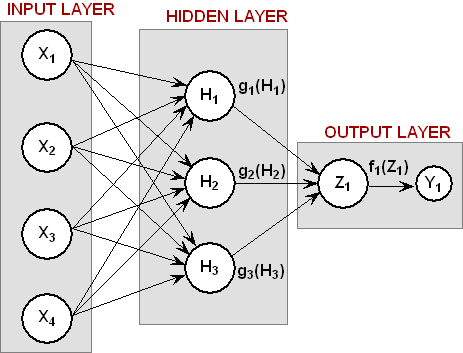

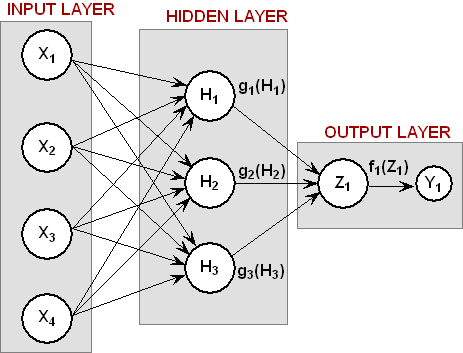

The network has four input nodes and two layers, with three perceptrons in the hidden layer and one in the output layer. The accompanying figure illustrates this structure.

All perceptrons use a linear activation function. Forecasts are generated for the following nine conditions:

Nominal Class 1-3 with the Continuous Input Attribute = 0

Nominal Class 1-3 with the Continuous Input Attribute = 5.0

Nominal Class 1-3 with the Continuous Input Attribute = 10.0
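The nine conditions can also be generated programmatically instead of being typed out. The sketch below is one possible way to do that, assuming three nominal classes and a continuous attribute that is scaled by dividing by its maximum of 10, as in the example. `ForecastGridSketch` and `buildScaledInputs` are hypothetical names, not library calls.

```java
import java.util.Arrays;

// Hypothetical helper for illustration; not part of com.imsl.datamining.neural.
class ForecastGridSketch {

    // Build one input row per (continuous value, nominal class) pair.
    // Columns 0..2 hold the binary-encoded class; column 3 holds X4 / x4Max.
    static double[][] buildScaledInputs(double[] x4Values, double x4Max) {
        int nClasses = 3;
        double[][] inputs = new double[x4Values.length * nClasses][nClasses + 1];
        int row = 0;
        for (double x4 : x4Values) {
            for (int c = 0; c < nClasses; c++) {
                inputs[row][c] = 1.0;              // binary column for class c+1
                inputs[row][nClasses] = x4 / x4Max; // scale X4 to [0, 1]
                row++;
            }
        }
        return inputs;
    }

    public static void main(String[] args) {
        double[][] grid = buildScaledInputs(new double[]{0.0, 5.0, 10.0}, 10.0);
        for (double[] rowValues : grid) {
            System.out.println(Arrays.toString(rowValues));
        }
    }
}
```

Each row produced this way has the layout expected by a four-input network and could be passed to a forecast call one row at a time.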

Note that the training statistics computed from the deserialized network confirm that this is the same network used in the previous example. Computing these statistics requires the training patterns, which were serialized and stored in separate files because they are not serialized with either the network or the trainer.
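Because the training patterns live in their own files, they have to be written out separately at training time. A minimal sketch of such a round trip with standard Java serialization is shown here. It uses a temporary file and placeholder values rather than the example's actual files or data, and `PatternFileSketch` is a hypothetical class name.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;

// Hypothetical helper for illustration; not part of com.imsl.datamining.neural.
class PatternFileSketch {

    // Serialize any Serializable object (arrays of double qualify) to a file.
    static void write(Object obj, Path file) throws IOException {
        try (ObjectOutputStream oos =
                new ObjectOutputStream(Files.newOutputStream(file))) {
            oos.writeObject(obj);
        }
    }

    // Read a serialized object back from a file.
    static Object read(Path file) throws IOException, ClassNotFoundException {
        try (ObjectInputStream ois =
                new ObjectInputStream(Files.newInputStream(file))) {
            return ois.readObject();
        }
    }

    // Write the patterns to a temporary file and read them back.
    static double[][] roundTrip(double[][] data) {
        try {
            Path file = Files.createTempFile("patterns", ".ser");
            try {
                write(data, file);
                return (double[][]) read(file);
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("round trip failed", e);
        }
    }

    public static void main(String[] args) {
        double[][] xData = {{1, 0, 0, 0.0}, {0, 1, 0, 0.5}}; // placeholder values
        double[][] restored = roundTrip(xData);
        System.out.println(Arrays.deepEquals(xData, restored)); // prints true
    }
}
```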

```java
import com.imsl.datamining.neural.*;
import java.io.*;

//***************************************************************************
// Two Layer Feed-Forward Network with 4 inputs: 1 categorical with 3 classes
// encoded using binary encoding and 1 continuous input, and 1 output
// target (continuous). There is a perfect linear relationship between
// the input and output variables:
//
// MODEL: Y = 10*X1 + 20*X2 + 30*X3 + 2*X4
//
// Variables X1-X3 are the binary encoded nominal variable and X4 is the
// continuous variable.
//
// This example uses Linear Activation in both the hidden and output layers.
// The network uses a 2-layer configuration, one hidden layer and one
// output layer. The hidden layer consists of 3 perceptrons. The output
// layer consists of a single output perceptron.
// The input from the continuous variable is scaled to [0,1] before training
// the network. Training is done using the Quasi-Newton Trainer.
// The network has a total of 19 weights.
// Since the network target is a linear combination of the network inputs,
// and since all perceptrons use linear activation, the network is able to
// forecast every training target exactly. The largest residual is
// 2.78E-08.
//***************************************************************************
public class NetworkEx1 implements Serializable {

    // **********************************************************************
    // MAIN
    // **********************************************************************
    public static void main(String[] args) throws Exception {
        double xData[][]; // Input Attributes for Training Patterns
        double yData[][]; // Output Attributes for Training Patterns
        double weight[];  // network weights
        double gradient[];// network gradient after training
        // Input Attributes for Forecasting
        double x[][] = {
            {1, 0, 0, 0.0}, {0, 1, 0, 0.0}, {0, 0, 1, 0.0},
            {1, 0, 0, 5.0}, {0, 1, 0, 5.0}, {0, 0, 1, 5.0},
            {1, 0, 0, 10.0}, {0, 1, 0, 10.0}, {0, 0, 1, 10.0}
        };
        double xTemp[], y[];// Temporary areas for storing forecasts
        int i, j;           // loop counters
        // Names of Serialized Files
        String networkFileName = "FeedForwardNetworkEx1.ser"; // the network
        String trainerFileName = "FeedForwardTrainerEx1.ser"; // the trainer
        String xDataFileName = "FeedForwardxDataEx1.ser";     // xData
        String yDataFileName = "FeedForwardyDataEx1.ser";     // yData

        // ******************************************************************
        // READ THE TRAINED NETWORK FROM THE SERIALIZED NETWORK OBJECT
        // ******************************************************************
        System.out.println("--> Reading Trained Network from "
                + networkFileName);
        Network network = (Network) read(networkFileName);

        // ******************************************************************
        // READ THE SERIALIZED XDATA[][] AND YDATA[][] ARRAYS OF TRAINING
        // PATTERNS.
        // ******************************************************************
        System.out.println("--> Reading xData from "
                + xDataFileName);
        xData = (double[][]) read(xDataFileName);
        System.out.println("--> Reading yData from "
                + yDataFileName);
        yData = (double[][]) read(yDataFileName);

        // ******************************************************************
        // READ THE SERIALIZED TRAINER OBJECT
        // ******************************************************************
        System.out.println("--> Reading Network Trainer from "
                + trainerFileName);
        Trainer trainer = (Trainer) read(trainerFileName);

        // ******************************************************************
        // DISPLAY TRAINING STATISTICS
        // ******************************************************************
        double stats[] = network.computeStatistics(xData, yData);
        // Display Network Errors
        System.out.println("***********************************************");
        System.out.println("--> SSE: "
                + (float) stats[0]);
        System.out.println("--> RMS: "
                + (float) stats[1]);
        System.out.println("--> Laplacian Error: "
                + (float) stats[2]);
        System.out.println("--> Scaled Laplacian Error: "
                + (float) stats[3]);
        System.out.println("--> Largest Absolute Residual: "
                + (float) stats[4]);
        System.out.println("***********************************************");
        System.out.println("");

        // ******************************************************************
        // OBTAIN AND DISPLAY NETWORK WEIGHTS AND GRADIENTS
        // ******************************************************************
        System.out.println("--> Getting Network Information");
        // Get weights
        weight = network.getWeights();
        // Get number of weights = number of gradients
        int nWeights = network.getNumberOfWeights();
        // Obtain Gradient Vector
        gradient = trainer.getErrorGradient();
        // Print Network Weights and Gradients
        System.out.println(" ");
        System.out.println("--> Network Weights and Gradients:");
        for (i = 0; i < nWeights; i++) {
            System.out.println("w[" + i + "]=" + (float) weight[i]
                    + " g[" + i + "]=" + (float) gradient[i]);
        }

        // ******************************************************************
        // OBTAIN AND DISPLAY FORECASTS FOR THE 9 FORECAST CONDITIONS
        // ******************************************************************
        // Get number of network inputs
        int nInputs = network.getNumberOfInputs();
        // Get number of network outputs
        int nOutputs = network.getNumberOfOutputs();
        xTemp = new double[nInputs]; // temporary x space for forecast inputs
        y = new double[nOutputs];    // temporary y space for forecast output
        System.out.println(" ");
        // Obtain example forecasts for input attributes = x[]
        // X1-X3 are binary encoded for one nominal variable with 3 classes
        // X4 is a continuous input attribute ranging from 0-10. During
        // training, X4 was scaled to [0,1] by dividing by 10.
        for (i = 0; i < x.length; i++) {
            for (j = 0; j < nInputs; j++) {
                xTemp[j] = x[i][j];
            }
            xTemp[nInputs - 1] = xTemp[nInputs - 1] / 10.0;
            y = network.forecast(xTemp);
            System.out.print("--> X1=" + (int) x[i][0]
                    + " X2=" + (int) x[i][1] + " X3=" + (int) x[i][2]
                    + " | X4=" + x[i][3]);
            System.out.println(" | y="
                    + (float) (10.0 * x[i][0] + 20.0 * x[i][1]
                    + 30.0 * x[i][2] + 2.0 * x[i][3])
                    + "| Forecast=" + (float) y[0]);
        }
    }

    // **********************************************************************
    // READ A SERIALIZED OBJECT FROM A FILE
    // **********************************************************************
    static public Object read(String filename)
            throws IOException, ClassNotFoundException {
        // try-with-resources closes both streams even if readObject() fails
        try (FileInputStream fis = new FileInputStream(filename);
                ObjectInputStream ois = new ObjectInputStream(fis)) {
            return ois.readObject();
        }
    }
}
```

```
--> Reading Trained Network from FeedForwardNetworkEx1.ser
--> Reading xData from FeedForwardxDataEx1.ser
--> Reading yData from FeedForwardyDataEx1.ser
--> Reading Network Trainer from FeedForwardTrainerEx1.ser
***********************************************
--> SSE: 1.0134443E-15
--> RMS: 2.0074636E-19
--> Laplacian Error: 3.0058038E-7
--> Scaled Laplacian Error: 3.5352343E-10
--> Largest Absolute Residual: 2.784276E-8
***********************************************

--> Getting Network Information

--> Network Weights and Gradients:
w[0]=-1.4917853 g[0]=-2.6110852E-8
w[1]=-1.4917853 g[1]=-2.6110852E-8
w[2]=-1.4917853 g[2]=-2.6110852E-8
w[3]=1.6169184 g[3]=6.182032E-8
w[4]=1.6169184 g[4]=6.182032E-8
w[5]=1.6169184 g[5]=6.182032E-8
w[6]=4.725622 g[6]=-5.273859E-8
w[7]=4.725622 g[7]=-5.273859E-8
w[8]=4.725622 g[8]=-5.273859E-8
w[9]=6.217407 g[9]=-8.7338103E-10
w[10]=6.217407 g[10]=-8.7338103E-10
w[11]=6.217407 g[11]=-8.7338103E-10
w[12]=1.0722584 g[12]=-1.6909877E-7
w[13]=1.0722584 g[13]=-1.6909877E-7
w[14]=1.0722584 g[14]=-1.6909877E-7
w[15]=3.8507552 g[15]=-1.7029118E-8
w[16]=3.8507552 g[16]=-1.7029118E-8
w[17]=3.8507552 g[17]=-1.7029118E-8
w[18]=2.4117248 g[18]=-1.5881545E-8

--> X1=1 X2=0 X3=0 | X4=0.0 | y=10.0| Forecast=10.0
--> X1=0 X2=1 X3=0 | X4=0.0 | y=20.0| Forecast=20.0
--> X1=0 X2=0 X3=1 | X4=0.0 | y=30.0| Forecast=30.0
--> X1=1 X2=0 X3=0 | X4=5.0 | y=20.0| Forecast=20.0
--> X1=0 X2=1 X3=0 | X4=5.0 | y=30.0| Forecast=30.0
--> X1=0 X2=0 X3=1 | X4=5.0 | y=40.0| Forecast=40.0
--> X1=1 X2=0 X3=0 | X4=10.0 | y=30.0| Forecast=30.0
--> X1=0 X2=1 X3=0 | X4=10.0 | y=40.0| Forecast=40.0
--> X1=0 X2=0 X3=1 | X4=10.0 | y=50.0| Forecast=50.0
```
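The 19 weights listed in the output (w[0] through w[18]) are consistent with a fully connected 4-3-1 network in which every perceptron has one weight per incoming link plus one bias weight. The sketch below checks that arithmetic under this assumption. `WeightCountSketch` is a hypothetical helper and does not reflect how the library stores or orders its weights.

```java
// Hypothetical helper for illustration; not part of com.imsl.datamining.neural.
class WeightCountSketch {

    // layerSizes[0] is the number of inputs; the remaining entries are the
    // number of perceptrons in each layer. Assumes full connectivity between
    // consecutive layers and one bias weight per perceptron.
    static int countWeights(int[] layerSizes) {
        int total = 0;
        for (int k = 1; k < layerSizes.length; k++) {
            total += layerSizes[k - 1] * layerSizes[k]; // link weights
            total += layerSizes[k];                      // bias weights
        }
        return total;
    }

    public static void main(String[] args) {
        // 4 inputs, 3 hidden perceptrons, 1 output perceptron:
        // (4*3 + 3) + (3*1 + 1) = 19
        System.out.println(countWeights(new int[]{4, 3, 1})); // prints 19
    }
}
```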