Example 3: Accumulation Of A Component

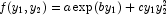

This example uses the same data as in the One-Sided Differences example. An alternate examination of the function  shows that the first term on the right-hand side need be evaluated just when computing the first partial. The additive term

shows that the first term on the right-hand side need be evaluated just when computing the first partial. The additive term  occurs when computing the partial with respect to

occurs when computing the partial with respect to  . Also the first term does not depend on the second variable. Thus the first term can be left out of the function evaluation when computing the partial with respect to

. Also the first term does not depend on the second variable. Thus the first term can be left out of the function evaluation when computing the partial with respect to  , potentially avoiding cancellation errors. The input values of array

, potentially avoiding cancellation errors. The input values of array options

allow NumericalDerivatives

to use these facts and obtain greater accuracy using a minimum number of computations of the exponential function.

using System;

using Imsl.Math;

public class NumericalDerivativesEx3 : NumericalDerivatives.IJacobian

{

static int m = 1, n = 2;

static double a, b, c;

public double[] F(int varIndex, double[] y)

{

double[] tmp = new double[m];

if (varIndex != 2)

{

tmp[0] = a * Math.Exp(b * y[0]);

}

else

{

// This is the function value for the partial wrt y_2.

tmp[0] = c * y[0] * y[1] * y[1];

}

return tmp;

}

public double[,] Jacobian(double[] y)

{

double[,] tmp = new double[m, n];

// Start with part of the derivative that is known.

tmp[0, 0] = c * y[1] * y[1];

return tmp;

}

public static void Main(String[] args)

{

NumericalDerivatives.DifferencingMethod[] options =

new NumericalDerivatives.DifferencingMethod[n];

double u;

double[] y = new double[n];

double[] valueF = new double[m];

double[] scale = new double[n];

double[,] actual = new double[m, n];

double[] re = new double[2];

// Define data and point of evaluation:

a = 2.5e6;

b = 3.4e0;

c = 4.5e0;

y[0] = 2.1e0;

y[1] = 3.2e0;

// Precision, for measuring errors

u = Math.Sqrt(2.220446049250313e-016);

// Set scaling:

scale[0] = 1.0e0;

// Increase scale to account for large value of a.

scale[1] = 8.0e3;

// Compute true values of partials.

actual[0, 0] = a * b * Math.Exp(b * y[0]) + c * y[1] * y[1];

actual[0, 1] = 2 * c * y[0] * y[1];

options[0] = NumericalDerivatives.DifferencingMethod.Accumulate;

options[1] = NumericalDerivatives.DifferencingMethod.OneSided;

valueF[0] = a * Math.Exp(b * y[0]);

scale[1] = 1.0e0;

NumericalDerivatives derv =

new NumericalDerivatives(new NumericalDerivativesEx3());

derv.SetDifferencingMethods(options);

derv.SetScalingFactors(scale);

derv.SetInitialF(valueF);

double[,] jacobian = derv.EvaluateJ(y);

PrintMatrixFormat pmf = new PrintMatrixFormat();

pmf.NumberFormat = "0.00";

new PrintMatrix("Numerical gradient:").Print(pmf, jacobian);

new PrintMatrix("Analytic gradient:").Print(pmf, actual);

jacobian[0, 0] = (jacobian[0, 0] - actual[0, 0]) / actual[0, 0];

jacobian[0, 1] = (jacobian[0, 1] - actual[0, 1]) / actual[0, 1];

re[0] = jacobian[0, 0];

re[1] = jacobian[0, 1];

Console.Out.WriteLine("Relative accuracy:");

Console.Out.WriteLine("df/dy_1 df/dy_2");

Console.Out.WriteLine(" {0:F2}u {1:F2}u",

re[0]/u, re[1]/u);

Console.Out.WriteLine("({0:#.###e+00}) ({1:#.###e+00})",

re[0], re[1]);

}

}

Output

Numerical gradient:

0 1

0 10722141710.08 60.48

Analytic gradient:

0 1

0 10722141353.42 60.48

Relative accuracy:

df/dy_1 df/dy_2

2.23u -0.51u

(3.326e-08) (-7.569e-09)

Link to C# source.