mlffPatternClassification¶

Calculates classifications for trained multilayered feedforward neural networks.

Synopsis¶

mlffPatternClassification (network, nominal, continuous)

Required Arguments¶

- Imsls_d_NN_Network network (Input) - An Imsls_d_NN_Network containing the trained feedforward network. See mlffNetwork.

- int nominal[[]] (Input) - Array of size nPatterns by nNominal containing the nominal input variables.

- float continuous[[]] (Input) - Array of size nPatterns by nContinuous containing the continuous and scaled ordinal input variables.

Return Value¶

An array of size nPatterns by nClasses containing the predicted class

probabilities associated with each input pattern, where nClasses is the

number of possible target classifications. nClasses =

network.n_outputs for non-binary classification categories. For binary

classification, nClasses = 2.

Optional Arguments¶

logisticTable, (Input)- This option specifies that all logistic activation functions are calculated using the table lookup approximation. This is only needed when a network is trained with this option and Stage II training is bypassed. If Stage II training was not bypassed during network training, weights were based upon the optimum network from Stage II which never uses a table lookup approximation to calculate logistic activations.

predictedClass (Output) - An array of size nPatterns containing the predicted classification for each pattern.

Description¶

Function mlffPatternClassification calculates classification

probabilities from a previously trained multilayered feedforward neural

network using the same network structure and scaling applied during the

training. The structure Imsls_d_NN_Network describes the network structure

used to originally train the network. The weights, which are the key output

from training, are used as input to this function. The weights are stored in

the Imsls_d_NN_Network structure.

In addition, two two-dimensional arrays describe the values of the nominal and continuous attributes that are used as network inputs for calculating classification probabilities. Optionally, the function also returns the predicted classifications in predictedClass. The predicted classification for a pattern is the target class with the highest probability in classProb.

Function mlffPatternClassification returns classification probabilities

for the network input patterns.
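The rule that the predicted classification is the class with the highest probability can be sketched with NumPy. This is only an illustration on made-up probabilities, not library output; the array name `class_prob` is hypothetical, and how the library itself breaks ties is not stated here (`numpy.argmax` picks the first maximum).

```python
import numpy as np

# Hypothetical probabilities for 3 patterns and 3 classes (not library output).
class_prob = np.array([
    [0.90, 0.07, 0.03],
    [0.10, 0.25, 0.65],
    [0.20, 0.60, 0.20],
])

# The predicted class is the zero-based column index of the largest probability.
predicted_class = np.argmax(class_prob, axis=1)
print(predicted_class)  # [0 2 1]
```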

Pattern Classification Attributes¶

Neural network classification inputs consist of the following types of attributes:

- nominal input attributes, and

- continuous attributes, including ordinal attributes encoded to cumulative percentages.

The first data type contains the encoding of any nominal input attributes. If binary encoding is used, this encoding consists of creating columns of zeros and ones for each class value associated with every nominal attribute. The function unsupervisedNominalFilter can be used for this encoding.

When only one nominal attribute is used for input, then the number of binary encoded columns is equal to the number of classes for that attribute. If more nominal attributes appear in the data, then each nominal attribute is associated with several columns, one for each of its classes. Each column consists of zeros and ones. The column value is zero if that classification is not associated with this pattern; otherwise, it is equal to one if it is assigned to this pattern.

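Consider an example with one nominal variable and two classes, male and female, and the following five patterns: male, male, female, male, female. With binary encoding, and taking the first column as the indicator for male and the second as the indicator for female, the following 5 by 2 matrix is passed to the pattern classification function to request classification probabilities for these patterns:

\[
\begin{bmatrix} 1 & 0 \\ 1 & 0 \\ 0 & 1 \\ 1 & 0 \\ 0 & 1 \end{bmatrix}
\]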
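The binary encoding of the male/female example can be sketched in plain NumPy. This is a minimal illustration rather than the library's filter; the column order (male first, female second) is an assumption, and the names `patterns`, `classes`, and `nominal` are chosen only for this sketch.

```python
import numpy as np

patterns = ["male", "male", "female", "male", "female"]
classes = ["male", "female"]  # assumed column order

# One column per class: 1 if the pattern belongs to that class, else 0.
nominal = np.array(
    [[1 if p == c else 0 for c in classes] for p in patterns],
    dtype=int,
)
print(nominal.shape)  # (5, 2)
print(nominal)
```

Each row contains exactly one 1, so the row sums are all equal to one.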

The second category of input attributes corresponds to continuous

attributes. They are passed to this classification function via the floating

point array continuous. The number of rows in this matrix is

nPatterns, and the number of columns is nContinuous, corresponding

to the number of continuous input attributes.

Ordinal input attributes, if used, are typically encoded to cumulative

percentages. Since these are floating point values, they are placed into a

column of the continuous array and nContinuous is set equal to the

number of columns in this array.
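One plain reading of "encoded to cumulative percentages" is to replace each ordinal level by the share of patterns at or below that level. The sketch below follows that reading on made-up data; the library's own ordinal filter may scale or transform the values differently, so treat the details as assumptions.

```python
import numpy as np

# Hypothetical ordinal attribute with ordered levels 1 < 2 < 3 (not source data).
ordinal = np.array([1, 3, 2, 2, 1, 3, 3, 2, 2, 2])

# Count the patterns at each level, then accumulate the proportions.
levels, counts = np.unique(ordinal, return_counts=True)
cum_pct = np.cumsum(counts) / counts.sum()

# Map every pattern to the cumulative proportion of its level.
encoded = cum_pct[np.searchsorted(levels, ordinal)]

# The encoded values occupy one column of the continuous input array.
continuous = encoded.reshape(-1, 1)
print(cum_pct)
```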

In some cases, one of these types of input attributes may not exist. In that

case, either nNominal = 0 or nContinuous = 0 and their corresponding

input matrix is ignored.

Network Configuration¶

The configuration of the network consists of a description of the number of perceptrons for each layer, the number of hidden layers, the number of inputs and outputs, and a description of the linkages among the perceptrons. This description is passed into this function through the structure Imsls_d_NN_Network. See mlffNetwork. For binary problems there is only a single output, since the probability P(class = 0) is equal to 1 - P(class = 1). For other classification problems, however, nOutputs = nClasses and P(class = j) is given by the classification probabilities in column j + 1 of classProb[].
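The relationship between the single binary output and the two-column probability array can be sketched as follows. The sketch assumes the single output is read as P(class = 1); the input values and the names `p1` and `class_prob` are illustrative only.

```python
import numpy as np

# Hypothetical single-output probabilities, read here as P(class = 1).
p1 = np.array([0.95, 0.10, 0.50, 0.73])

# Column 0 holds P(class = 0) = 1 - P(class = 1); column 1 holds P(class = 1).
class_prob = np.column_stack((1.0 - p1, p1))
print(class_prob.shape)  # (4, 2)
```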

Classification Probabilities¶

Classification probabilities are calculated from the input attributes,

network structure and weights provided in network.

Classification probabilities are returned in a two-dimensional array,

classProb, with nPatterns rows and nClasses columns. The values in

the i-th column are estimated probabilities for the class = (i-1).

Examples¶

Example 1¶

Fisher’s (1936) Iris data is often used for benchmarking discriminant analysis and classification solutions. It is part of the IMSL data sets and consists of the following continuous input attributes and classification target:

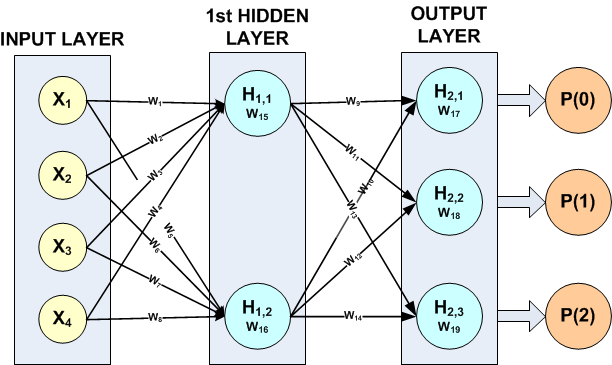

Continuous Attributes – \(X_1\)(sepal length), \(X_2\)(sepal width), \(X_3\)(petal length), and \(X_4\)(petal width)

Classification Target (Iris Type) – Setosa, Versicolour or Virginica.

The input attributes were scaled to z-scores using scaleFilter. The hidden layer contained only 2 perceptrons and the output layer consisted of three perceptrons, one for each classification target.
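For orientation, z-score scaling subtracts a center and divides by a spread. The sketch below uses the mean and the sample standard deviation (`ddof=1`), which is an assumption about the scaling method; the data values are made up and are not the Iris measurements.

```python
import numpy as np

# Hypothetical measurements (not the actual Iris data).
x = np.array([5.1, 4.9, 6.3, 5.8, 7.1, 6.0])

center = x.mean()
spread = x.std(ddof=1)  # assumption: sample standard deviation

# Scaled values have mean 0 and sample standard deviation 1.
z = (x - center) / spread
print(center, spread)
```

The same center and spread found on the training data must be reused when new patterns are scaled for classification.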

Example 2 for mlffClassificationTrainer

used the following network structure for the 150 patterns in these data:

Figure 13.17 — A 2-layer, Classification Network with 4 Inputs 5 Perceptrons and a Target Classification with 3 Classes

mlffClassificationTrainer found the following 19 weights for this

network:

W1 = -0.109866 W2 = -0.0534655 W3 = 4.92944 W4 = -2.04734

W5 = 10.2339 W6 = -1495.09 W7 = 3336.49 W8 = 7372.98

W9 = -9143.53 W10 = 48.8937 W11 = 240.958 W12 = -3386.21

W13 = 8904.6 W14 = 3339.1 W15 = 0.874638 W16 = -7978.42

W17 = 4586.22 W18 = 1931.89 W19 = -6518.14

The association of these weights with the calculation of the potentials for each perceptron is described in the following table:

| PERCEPTRON | POTENTIAL | ACTIVATION |
|---|---|---|
| \(H_{1,1}\) | \(W_{15}\) + \(X_1W_1\) + \(X_2W_2\) + \(X_3W_3\) + \(X_4W_4\) | LOGISTIC |
| \(H_{1,2}\) | \(W_{16}\) + \(X_1W_5\) + \(X_2W_6\) + \(X_3W_7\) + \(X_4W_8\) | LOGISTIC |
| \(H_{2,1}\) | \(W_{17}\) + \(H_{1,1}W_9\) + \(H_{1,2}W_{10}\) | SOFTMAX |
| \(H_{2,2}\) | \(W_{18}\) + \(H_{1,1}W_{11}\) + \(H_{1,2}W_{12}\) | SOFTMAX |
| \(H_{2,3}\) | \(W_{19}\) + \(H_{1,1}W_{13}\) + \(H_{1,2}W_{14}\) | SOFTMAX |

The potential of each perceptron is passed through its assigned activation function. In this example, default activations were used: logistic for \(H_{1,1}\) and \(H_{1,2}\) and softmax for the output perceptrons \(H_{2,1}\), \(H_{2,2}\) and \(H_{2,3}\).
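The potentials in the table can be traced by hand with a short NumPy sketch. It assumes the textbook definitions of the logistic and softmax activations, which may differ in detail from the library's internals, and it uses the weights as printed (rounded), so the results need not match the library exactly. The function names `logistic` and `forward` are hypothetical.

```python
import numpy as np

# Weights W1..W19 as printed above (rounded), stored so that W[k - 1] is W_k.
W = np.array([
    -0.109866, -0.0534655, 4.92944, -2.04734,   # W1..W4:   inputs -> H(1,1)
    10.2339, -1495.09, 3336.49, 7372.98,        # W5..W8:   inputs -> H(1,2)
    -9143.53, 48.8937, 240.958, -3386.21,       # W9..W12:  hidden -> H(2,1), H(2,2)
    8904.6, 3339.1,                             # W13..W14: hidden -> H(2,3)
    0.874638, -7978.42,                         # W15..W16: hidden biases
    4586.22, 1931.89, -6518.14,                 # W17..W19: output biases
])


def logistic(a):
    # Equivalent to 1 / (1 + exp(-a)), written with tanh to avoid overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * a))


def forward(x):
    """Return three class probabilities for one scaled pattern (X1..X4)."""
    x = np.asarray(x, dtype=float)
    h = np.array([
        logistic(W[14] + x @ W[0:4]),  # H(1,1)
        logistic(W[15] + x @ W[4:8]),  # H(1,2)
    ])
    potentials = np.array([
        W[16] + h[0] * W[8] + h[1] * W[9],    # H(2,1)
        W[17] + h[0] * W[10] + h[1] * W[11],  # H(2,2)
        W[18] + h[0] * W[12] + h[1] * W[13],  # H(2,3)
    ])
    # Softmax, shifted by the largest potential for numerical stability.
    e = np.exp(potentials - potentials.max())
    return e / e.sum()


# A pattern at the scaled mean of all four attributes.
probs = forward([0.0, 0.0, 0.0, 0.0])
print(probs.sum())
```

Because the output weights are large in magnitude, the softmax output is close to 0 or 1 for most inputs, which is consistent with the probabilities printed in the example output.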

Note that in this case the network weights were retrieved from a file named iris_classification.txt using mlffNetworkRead. This retrieves the network trained by mlffClassificationTrainer in its Example 2. The weights were passed directly to mlffPatternClassification in the Imsls_d_NN_Network structure.

from __future__ import print_function
from numpy import empty
from pyimsl.stat.dataSets import dataSets
from pyimsl.stat.scaleFilter import scaleFilter
from pyimsl.stat.mlffNetworkRead import mlffNetworkRead
from pyimsl.stat.mlffPatternClassification import mlffPatternClassification

# Three Layer Feed-Forward Network with 4 inputs, all
# continuous, and 3 classification categories.
#
# This is perhaps the best known database to be found in the pattern
# recognition literature. Fisher's paper is a classic in the
# field. The data set contains 3 classes of 50 instances each,
# where each class refers to a type of iris plant. One class is
# linearly separable from the other 2; the latter are NOT linearly
# separable from each other.
#
# Predicted attribute: class of iris plant.
# 1=Iris Setosa, 2=Iris Versicolour, and 3=Iris Virginica
#
# Input Attributes (4 Continuous Attributes)
# X1: Sepal length,
# X2: Sepal width,
# X3: Petal length,
# and X4: Petal width

n_patterns = 150
n_inputs = 4  # four inputs, all continuous
n_nominal = 0  # no nominal input attributes
n_continuous = 4  # four continuous input attributes
n_outputs = 3  # total number of output perceptrons
predicted_class = []
classification = empty(150, dtype=int)
unscaledX = empty(150)
scaledX = empty(150)
contAtt = empty([150, 4])
dmean = empty(4)
s = empty(4)
colLabels = ["Pattern", "Class=0", "Class=1", "Class=2"]
filename = "iris_classification.txt"
prtLabel = "\nPredicted_Class | P(0) P(1) P(2)"
dashes = "-----------------------------------------------"

print("******************************************************")
print(" IRIS CLASSIFICATION EXAMPLE - PATTERN CLASSIFICATION ")
print("******************************************************")

irisData = dataSets(3)

# Setup the continuous attribute input array, contAtt[], and the
# network target classification array, classification[], using
# the above raw data matrix.
for i in range(0, n_patterns):
    # Column 0 holds the class (1..3); shift it to 0..2.
    classification[i] = irisData[i, 0] - 1
    for j in range(1, 5):
        contAtt[i, j - 1] = irisData[i, j]

# Scale continuous input attributes using z-score method
center_spread = {}
for j in range(0, n_continuous):
    for i in range(0, n_patterns):
        unscaledX[i] = contAtt[i, j]
    scaledX = scaleFilter(unscaledX, 2, returnCenterSpread=center_spread)
    for i in range(0, n_patterns):
        contAtt[i, j] = scaledX[i]
    dmean[j] = center_spread['center']
    s[j] = center_spread['spread']

print("Scale Parameters:")
for j in range(0, n_continuous):
    print("Var %d Mean = %f S = %f" % (j + 1, dmean[j], s[j]))

# Read the previously trained network from file.
network = mlffNetworkRead(filename, t_print=True)

# Use pattern classification routine to classify training
# patterns using trained network.
classProb = mlffPatternClassification(network, None, contAtt,
                                      predictedClass=predicted_class)

# Print class predictions
print(prtLabel)
print(dashes)
for i in range(0, n_patterns):
    print(" %d " % predicted_class[i], end=' ')
    print(" | %f %f %f" %
          (classProb[i][0],
           classProb[i][1],
           classProb[i][2]))
    # Repeat the header after each block of 50 patterns.
    if i == 49 or i == 99:
        print(prtLabel)
        print(dashes)

Output¶

The output for this example reproduces the 100% classification accuracy

found during network training. For details, see

Example 2 of mlffClassificationTrainer.

******************************************************

IRIS CLASSIFICATION EXAMPLE - PATTERN CLASSIFICATION

******************************************************

Scale Parameters:

Var 1 Mean = 5.843333 S = 0.828066

Var 2 Mean = 3.057333 S = 0.435866

Var 3 Mean = 3.758000 S = 1.765298

Var 4 Mean = 1.199333 S = 0.762238

Predicted_Class | P(0) P(1) P(2)

-----------------------------------------------

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

0 | 1.000000 0.000000 0.000000

Predicted_Class | P(0) P(1) P(2)

-----------------------------------------------

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

1 | 0.000000 1.000000 0.000000

Predicted_Class | P(0) P(1) P(2)

-----------------------------------------------

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

2 | 0.000000 0.000000 1.000000

Attempting to open iris_classification.txt for

reading network data structure

File iris_classification.txt Successfully Opened

File iris_classification.txt closed

Example 2¶

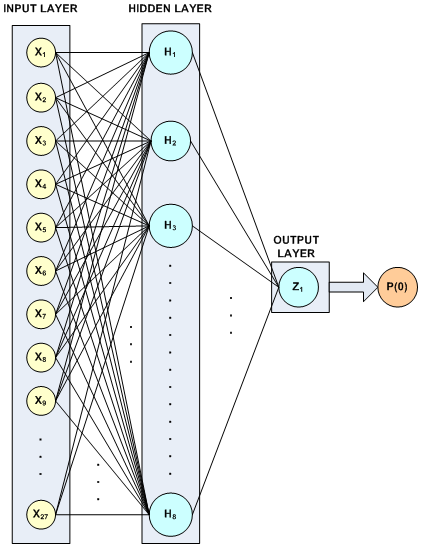

Pattern classification is often used for pattern recognition, including playing simple games such as tic-tac-toe. The University of California at Irvine maintains a repository of data mining data, http://kdd.ics.uci.edu/. One of its data sets consists of 958 patterns for board positions in tic-tac-toe donated by David Aha. See http://archive.ics.uci.edu/ml/datasets/Tic-Tac-Toe+Endgame for access to the actual data.

Each of the 958 patterns is described by nine nominal input attributes and one classification target. The nine nominal input attributes are the nine board positions in the game. Each has three classifications: X occupies the position, O occupies the position and vacant.

The target class is binary. A value of one indicates that the X player has one of eight possible wins in the next move. A value of zero indicates that this player does not have a winning position. 65.3% of the 958 patterns have a class = 1.

The nine nominal input attributes are mapped into 27 binary encoded columns, three for each of the nominal attributes. This makes a total of 27 input columns for the network. In this example, a neural network with one hidden layer containing eight perceptrons was found to provide 100% classification accuracy. This configuration is illustrated in the following figure.

Figure 13.18 — A 2-layer, Binary Classification Network for Playing Tic-Tac-Toe

All hidden layer perceptrons used the default activation, logistic, and

since the classification target is binary only one perceptron with logistic

activation is used to calculate the probability of a loss for X, i.e.,

\(P(\text{class} = 0)\). All logistic activations are calculated using the

logisticTable option, which can reduce Stage I training time. Since

Stage II training is bypassed, this option must also be used with the

mlffPatternClassification routine. This is the only time this option is

used. If Stage II training was part of the network training, the final

network weights would have been calculated without using the logistic table

to approximate the calculations.
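To see why a network trained with a table lookup should also be evaluated with one, consider the following sketch of a lookup approximation to the logistic function. The table range, the number of entries, and the nearest-entry rule are all hypothetical choices for illustration; the library's actual table is not described here.

```python
import numpy as np

# Hypothetical table settings; the library's real range and resolution may differ.
A_MAX = 10.0
N_ENTRIES = 2001
GRID = np.linspace(-A_MAX, A_MAX, N_ENTRIES)
TABLE = 1.0 / (1.0 + np.exp(-GRID))  # exact values, computed once


def logistic_lookup(a):
    """Approximate the logistic function by nearest-entry table lookup."""
    a = np.clip(np.asarray(a, dtype=float), -A_MAX, A_MAX)
    idx = np.rint((a + A_MAX) / (2.0 * A_MAX) * (N_ENTRIES - 1)).astype(int)
    return TABLE[idx]


a = np.array([-3.2, -0.5, 0.0, 1.7, 25.0])
exact = 1.0 / (1.0 + np.exp(-a))
approx = logistic_lookup(a)
max_err = np.abs(exact - approx).max()
print(max_err)
```

The approximation error is small but nonzero, so weights tuned against the table values are best evaluated with the same approximation.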

This structure results in a network with \(27\times 8+8+9=233\) weights. It is surprising that with this small a number of weights relative to the number of training patterns, the trained network achieves 100% classification accuracy.
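The weight count can be checked with a few lines of arithmetic. The helper below assumes a fully linked feedforward network with one bias weight per hidden and output perceptron; the function name `count_weights` is hypothetical.

```python
def count_weights(layer_sizes):
    """Count link weights plus one bias per non-input perceptron
    for a fully linked feedforward network."""
    links = sum(a * b for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return links + biases


# 27 inputs, 8 hidden perceptrons, 1 output: 27*8 + 8 link weights, 9 biases.
tic_tac_toe = count_weights([27, 8, 1])

# 4 inputs, 2 hidden perceptrons, 3 outputs, as in Example 1.
iris = count_weights([4, 2, 3])

print(tic_tac_toe, iris)  # 233 19
```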

Unlike Example 1 in which the network was trained previously and retrieved

using mlffNetworkRead, this example first trains

the network and then passes the network structure network into

mlffPatternClassification.

from __future__ import print_function
from numpy import empty
from pyimsl.stat.dataSets import dataSets
from pyimsl.stat.unsupervisedNominalFilter import unsupervisedNominalFilter
from pyimsl.stat.mlffNetworkInit import mlffNetworkInit
from pyimsl.stat.mlffNetwork import mlffNetwork
from pyimsl.stat.randomSeedSet import randomSeedSet
from pyimsl.stat.mlffClassificationTrainer import mlffClassificationTrainer, EQUAL
from pyimsl.stat.mlffPatternClassification import mlffPatternClassification

n_cat = 9  # 9 nominal input attributes
n_categorical = 27  # 9 attributes x 3 classes = 27 binary encoded inputs
n_classes = 2  # positive or negative
n_patterns = []
n_var = []
trainStats = []
predictedClass = []
classification = empty(958, dtype=int)

# get tic tac toe data
inputData = dataSets(10,
                     nObservations=n_patterns,
                     nVariables=n_var)
n_patterns = n_patterns[0]
n_var = n_var[0]

print("*******************************************************")
print("* TIC-TAC-TOE BINARY CLASSIFICATION EXAMPLE *")
print("*******************************************************")

# Populate categoricalAtt from the nominal input data using binary encoding
categoricalAtt = empty([958, n_categorical], dtype=int)
nomTempIn = empty(n_patterns, dtype=int)
m = 0  # index of the first encoded column for the current attribute
for i in range(0, n_cat):
    for j in range(0, n_patterns):
        nomTempIn[j] = inputData[j, i] + 1
    nClass = []
    nomTempOut = unsupervisedNominalFilter(nClass, nomTempIn)
    for k in range(0, nClass[0]):
        for j in range(0, n_patterns):
            categoricalAtt[j, k + m] = nomTempOut[j, k]
    m = m + nClass[0]

# Setup the classification array, classification[]
for i in range(0, n_patterns):
    classification[i] = inputData[i, n_var - 1]

# Network with 27 inputs, one hidden layer of 8 perceptrons, and 1 output
network = mlffNetworkInit(27, 1)
mlffNetwork(network, createHiddenLayer=8, linkAll=True)

randomSeedSet(5555)

# Train Classification Network
trainStats = mlffClassificationTrainer(network, classification, categoricalAtt, None,
                                       stageI={'nEpochs': 30,
                                               'epochSize': n_patterns},
                                       noStageII=True,
                                       logisticTable=True,
                                       weightInitializationMethod=EQUAL)

# Use pattern classification routine to classify training patterns
# using trained network. This reproduces the classifications found
# during training. logisticTable is required because Stage II was bypassed.
classProb = mlffPatternClassification(network, categoricalAtt, None,
                                      logisticTable=True,
                                      predictedClass=predictedClass)

# Print Classification Predictions
print("*******************************************************")
print("Classification Minimum Cross-Entropy Error: %f" % trainStats[0])
print("Classification Error Rate: %f" % trainStats[5])
print("*******************************************************")
print("\nPRINTING FIRST TEN PREDICTIONS FOR EACH TARGET CLASS")
print("*******************************************************")
print(" |TARGET|PREDICTED| | *")
print("PATTERN |CLASS | CLASS | P(class=0) | P(class=1) *")
print("*******************************************************")
for k in range(0, 2):
    for i in range(k * 627, k * 627 + 10):
        print(" %d\t| %d | %d | " %
              (i + 1, classification[i], predictedClass[i]), end=' ')
        print("%f | %f" % (classProb[i, 0], classProb[i, 1]))
print()

# Count disagreements between target and predicted classifications
k = 0
for i in range(0, n_patterns):
    if classification[i] != predictedClass[i]:
        k = k + 1
if k == 0:
    print("All %d predicted classifications agree" % n_patterns, end=' ')
    print("with target classifications")

Output¶

The output for this example demonstrates how mlffPatternClassification

reproduces the 100% classification accuracy found during network training.

*******************************************************

* TIC-TAC-TOE BINARY CLASSIFICATION EXAMPLE *

*******************************************************

*******************************************************

Classification Minimum Cross-Entropy Error: 0.000000

Classification Error Rate: 0.000000

*******************************************************

PRINTING FIRST TEN PREDICTIONS FOR EACH TARGET CLASS

*******************************************************

|TARGET|PREDICTED| | *

PATTERN |CLASS | CLASS | P(class=0) | P(class=1) *

*******************************************************

1 | 1 | 1 | 0.000000 | 1.000000

2 | 1 | 1 | 0.000000 | 1.000000

3 | 1 | 1 | 0.000000 | 1.000000

4 | 1 | 1 | 0.000000 | 1.000000

5 | 1 | 1 | 0.000000 | 1.000000

6 | 1 | 1 | 0.000000 | 1.000000

7 | 1 | 1 | 0.000000 | 1.000000

8 | 1 | 1 | 0.000000 | 1.000000

9 | 1 | 1 | 0.000000 | 1.000000

10 | 1 | 1 | 0.000000 | 1.000000

628 | 0 | 0 | 1.000000 | 0.000000

629 | 0 | 0 | 1.000000 | 0.000000

630 | 0 | 0 | 1.000000 | 0.000000

631 | 0 | 0 | 1.000000 | 0.000000

632 | 0 | 0 | 1.000000 | 0.000000

633 | 0 | 0 | 1.000000 | 0.000000

634 | 0 | 0 | 1.000000 | 0.000000

635 | 0 | 0 | 1.000000 | 0.000000

636 | 0 | 0 | 1.000000 | 0.000000

637 | 0 | 0 | 1.000000 | 0.000000

All 958 predicted classifications agree with target classifications
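The agreement check at the end of the example can be condensed with NumPy. The sketch below uses made-up targets and predictions, not the tic-tac-toe data, and the variable names are chosen only for illustration.

```python
import numpy as np

# Hypothetical targets and predictions (not from the tic-tac-toe data).
target = np.array([1, 1, 0, 0, 1, 0])
predicted = np.array([1, 1, 0, 1, 1, 0])

# Number of patterns whose predicted class differs from the target class.
n_errors = int(np.count_nonzero(target != predicted))
error_rate = n_errors / target.size
print(n_errors, error_rate)
```

An error rate of zero corresponds to the "All 958 predicted classifications agree" message in the output above.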