mlff_network

Creates a multilayered feedforward neural network.

Synopsis

#include <imsls.h>

void imsls_f_mlff_network (Imsls_f_NN_Network *network, ..., 0)

The type double function is imsls_d_mlff_network.

Required Arguments

Imsls_f_NN_Network *network (Input/Output)

A pointer to the structure containing the neural network that was initialized by imsls_f_mlff_network_init. On output, the data structure will be updated depending on the optional arguments used.

Synopsis with Optional Arguments

#include <imsls.h>

void imsls_f_mlff_network (Imsls_f_NN_Network *network,

IMSLS_CREATE_HIDDEN_LAYER, int n_perceptrons,

IMSLS_ACTIVATION_FCN, int layer_id, int activation_fcn[],

IMSLS_BIAS, int layer_id, float bias[],

IMSLS_LINK_ALL, or

IMSLS_LINK_LAYER, int to, int from, or

IMSLS_LINK_NODE, int to, int from, or

IMSLS_REMOVE_LINK, int to, int from,

IMSLS_N_LINKS, int *n_links,

IMSLS_DISPLAY_NETWORK,

0)

Optional Arguments for imsls_f_mlff_network

IMSLS_CREATE_HIDDEN_LAYER, int n_perceptrons (Input)

Creates a hidden layer with n_perceptrons perceptrons. Each call creates one hidden layer, so to create more than one hidden layer, call imsls_f_mlff_network once for each layer with optional argument IMSLS_CREATE_HIDDEN_LAYER.

Default: No hidden layer is created.

IMSLS_ACTIVATION_FCN, int layer_id, int activation_fcn[] (Input)

Specifies the activation function for each perceptron in a hidden layer or the output layer, indicated by layer_id. layer_id must be between 1 and the index of the output layer (the number of hidden layers plus one). If a hidden layer has been created, layer_id = 1 indicates the first hidden layer; if there are no hidden layers, layer_id = 1 indicates the output layer. Argument activation_fcn is an array of length n_perceptrons, the number of perceptrons in layer layer_id, and activation_fcn[i] contains the activation function for the i-th perceptron. Valid values for activation_fcn are:

| Activation Function | Description |
|---|---|
| IMSLS_LINEAR | Linear |
| IMSLS_LOGISTIC | Logistic |
| IMSLS_TANH | Hyperbolic-tangent |
| IMSLS_SQUASH | Squash |

Default: activation_fcn[i] = IMSLS_LINEAR for the output layer and activation_fcn[i] = IMSLS_LOGISTIC for all hidden layers.

IMSLS_BIAS, int layer_id, float bias[] (Input)

Specifies the bias values for each perceptron in a hidden layer or the output layer, indicated by layer_id. layer_id must be between 1 and the index of the output layer (the number of hidden layers plus one). If a hidden layer has been created, layer_id = 1 indicates the first hidden layer; if there are no hidden layers, layer_id = 1 indicates the output layer. Argument bias is an array of length n_perceptrons, the number of perceptrons in layer layer_id, and bias[i] contains the initial bias value for the i-th perceptron.

Default: bias[i] = 0.0

IMSLS_LINK_ALL (Input)

Connects all nodes in a layer to each node in the next layer, for all layers in the network. To create a valid network, use IMSLS_LINK_ALL, IMSLS_LINK_LAYER, or IMSLS_LINK_NODE.

or

IMSLS_LINK_LAYER, int to, int from (Input)

Creates a link between all nodes in layer from to all nodes in layer to. Layers are numbered starting at zero with the input layer, then the hidden layers in the order they are created, and finally the output layer. To create a valid network, use IMSLS_LINK_ALL, IMSLS_LINK_LAYER, or IMSLS_LINK_NODE.

or

IMSLS_LINK_NODE, int to, int from (Input)

Links node from to node to. Nodes are numbered starting at zero with the input nodes, then the hidden layer perceptrons, and finally the output perceptrons. To create a valid network, use IMSLS_LINK_ALL, IMSLS_LINK_LAYER, or IMSLS_LINK_NODE.

or

IMSLS_REMOVE_LINK, int to, int from (Input)

Removes the link between node from and node to. Nodes are numbered starting at zero with the input nodes, then the hidden layer perceptrons, and finally the output perceptrons.

IMSLS_N_LINKS, int *n_links (Output)

Returns the number of links in the network.

IMSLS_DISPLAY_NETWORK (Input)

Displays the contents of the network structure.

Default: No printing is done.

Description

A multilayered feedforward network contains an input layer, an output layer and zero or more hidden layers. The input and output layers are created by the function imsls_f_mlff_network_init. The hidden layers are created by one or more calls to imsls_f_mlff_network with the keyword IMSLS_CREATE_HIDDEN_LAYER, where n_perceptrons specifies the number of perceptrons in the hidden layer.

The network also contains links, or connections, between nodes. Links are created with one of three optional arguments to imsls_f_mlff_network: IMSLS_LINK_ALL, IMSLS_LINK_LAYER, or IMSLS_LINK_NODE. The most useful is IMSLS_LINK_ALL, which connects every node in each layer to every node in the next layer. A feedforward network is a network in which links are allowed only from one layer to a following layer.

Each link has a weight and gradient value. Each perceptron node has a bias value. When the network is trained, the weight and bias values are used as initial guesses. After the network is trained using imsls_f_mlff_network_trainer, the weight, gradient and bias values are updated in the Imsls_f_NN_Network structure.

Each perceptron has an activation function, g, and a bias, μ. The value of the perceptron is given by g(Z), where g is the activation function and Z is the potential calculated using

$$Z = \mu + \sum_{i=1}^{m} w_i x_i$$

where m is the number of input links to this perceptron, and x_i are the values of the nodes input to this perceptron with weights w_i.

All information for the network is stored in the structure called Imsls_f_NN_Network. (If the type is double, then the structure name is Imsls_d_NN_Network.) This structure describes the network that is trained by imsls_f_mlff_network_trainer.

The following code gives a detailed description of Imsls_f_NN_Network:

typedef struct
{
    int n_inputs;
    int n_outputs;
    int n_layers;
    Imsls_NN_Layer *layers;
    int n_links;
    int next_link;
    Imsls_f_NN_Link *links;
    int n_nodes;
    Imsls_f_NN_Node *nodes;
} Imsls_f_NN_Network;

where Imsls_NN_Layer is:

typedef struct
{
    int n_nodes;
    int *nodes;   /* An array containing the indices into the
                     Node array that belong to this layer */
} Imsls_NN_Layer;

Imsls_f_NN_Link is:

typedef struct
{
    float weight;
    float gradient;
    int to_node;     /* index of to node */
    int from_node;   /* index of from node */
} Imsls_f_NN_Link;

and Imsls_f_NN_Node is:

typedef struct
{
    int layer_id;
    int n_inLinks;
    int n_outLinks;
    int *inLinks;    /* index to Links array */
    int *outLinks;   /* index to Links array */
    float gradient;
    float bias;
    int ActivationFcn;
} Imsls_f_NN_Node;

In particular, if network is a pointer to a structure of type Imsls_f_NN_Network, then:

| Structure member | Description |
|---|---|
| network->n_layers | Number of layers in network. Layers are numbered starting at 0 for the input layer. |
| network->n_nodes | Total number of nodes in network, including the input attributes. |
| network->n_links | Total number of links or connections between input attributes and perceptrons and between perceptrons from layer to layer. |
| network->layers[0] | Input layer with n_inputs attributes. |
| network->layers[network->n_layers-1] | Output layer with n_outputs perceptrons. |
| network->n_inputs, which is equal to network->layers[0].n_nodes | n_inputs (number of input attributes). |
| network->n_outputs, which is equal to network->layers[network->n_layers-1].n_nodes | n_outputs (number of output perceptrons). |
| network->layers[1].n_nodes | Number of perceptrons in the first hidden layer, or number of output perceptrons if there is no hidden layer. |
| network->links[i].weight | Initial weight for the i-th link in network. After training has completed, this member contains the weight used for forecasting. |
| network->nodes[i].bias | Initial bias value for the i-th node. After training has completed, the bias value is updated. |

Nodes are numbered starting at zero with the input nodes, followed by the hidden layer perceptrons and finally the output perceptrons.

Layers are numbered starting at zero with the input layer, followed by the hidden layers and finally the output layer. If there are no hidden layers, the output layer is numbered one.

Links are numbered starting at zero in the order in which they were created. If IMSLS_LINK_ALL was used, link 0 connects the first input node to the first node in the next layer, link 1 connects the first input node to the second node in the next layer, and so on through every node of each layer, ending with the link from the last node in the next-to-last layer to the last node in the output layer. However, because links can be created in many different orders, it is recommended that you initialize the weights with the imsls_f_initialize_weights routine or with the optional argument IMSLS_WEIGHT_INITIALIZATION_METHOD in functions imsls_f_mlff_network_trainer and imsls_f_mlff_classification_trainer. Alternatively, the weights can be set directly in the Imsls_f_NN_Network data structure. The following code shows how to initialize the network weights in an Imsls_f_NN_Network variable named network:

int j, k, wIdx;

/* Visit every perceptron; input nodes 0 .. n_inputs-1 are skipped */
for (j = network->n_inputs; j < network->n_nodes; j++)
{
    /* Visit each input link of perceptron j */
    for (k = 0; k < network->nodes[j].n_inLinks; k++)
    {
        wIdx = network->nodes[j].inLinks[k];   /* index into network->links */
        /* set specific layer weights */
        if (network->nodes[j].layer_id == 1) {
            network->links[wIdx].weight = 0.5f;
        } else if (network->nodes[j].layer_id == 2) {
            network->links[wIdx].weight = 0.33f;
        } else {
            network->links[wIdx].weight = 0.25f;
        }
    }
}

In the outer for loop, j iterates over each perceptron in the network; input nodes are not perceptrons, so they are excluded. In the inner for loop, k iterates over the perceptron's input links. network->nodes[j].n_inLinks is the number of input links for network->nodes[j], and network->nodes[j].inLinks[k] contains the index of the k-th input link to network->nodes[j] in the network->links array.

This example also illustrates how to set the weights based on the layer_id number. network->nodes[j].layer_id contains the layer identification number, which is used here to set the weights for the first hidden layer to 0.5, the weights for the second hidden layer to 0.33, and all other weights to 0.25.

Examples

Example 1

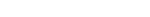

This example creates a single-layer feedforward network. The network inputs are directly connected to the output perceptrons using the IMSLS_LINK_ALL argument. The output perceptrons use the default linear activation function and default bias values of 0.0. The IMSLS_DISPLAY_NETWORK argument is used to show the default settings of the network.

Figure 30, A Single-Layer Feedforward Neural Net

#include <imsls.h>

int main()
{
    Imsls_f_NN_Network *network;

    network = imsls_f_mlff_network_init(3, 2);

    imsls_f_mlff_network(network,
        IMSLS_LINK_ALL,
        IMSLS_DISPLAY_NETWORK,
        0);

    imsls_f_mlff_network_free(network);
}

Output

+++++++++++
Input Layer
-----------------
NODE_0
Activation Fcn = 0
Bias = 0.000000
Output Links : 0 1
NODE_1
Activation Fcn = 0
Bias = 0.000000
Output Links : 2 3
NODE_2
Activation Fcn = 0
Bias = 0.000000
Output Links : 4 5

Output Layer
-----------------
NODE_3
Activation Fcn = 0
Bias = 0.000000
Input Links : 0 2 4
NODE_4
Activation Fcn = 0
Bias = 0.000000
Input Links : 1 3 5

******* Links ********
network->links[0].weight = 0.00000000000000000000
network->links[0].gradient = 1.00000000000000000000
network->links[0].to_node = 3
network->links[0].from_node = 0
network->links[1].weight = 0.00000000000000000000
network->links[1].gradient = 1.00000000000000000000
network->links[1].to_node = 4
network->links[1].from_node = 0
network->links[2].weight = 0.00000000000000000000
network->links[2].gradient = 1.00000000000000000000
network->links[2].to_node = 3
network->links[2].from_node = 1
network->links[3].weight = 0.00000000000000000000
network->links[3].gradient = 1.00000000000000000000
network->links[3].to_node = 4
network->links[3].from_node = 1
network->links[4].weight = 0.00000000000000000000
network->links[4].gradient = 1.00000000000000000000
network->links[4].to_node = 3
network->links[4].from_node = 2
network->links[5].weight = 0.00000000000000000000
network->links[5].gradient = 1.00000000000000000000
network->links[5].to_node = 4
network->links[5].from_node = 2

Example 2

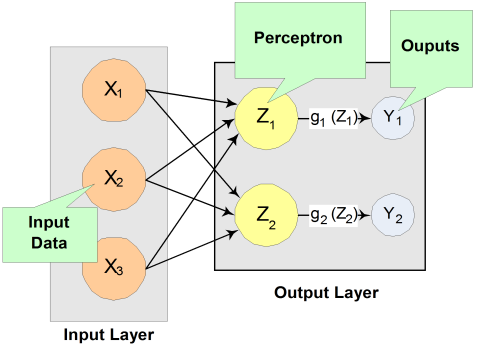

This example creates a two-layer feedforward network with four inputs, one hidden layer with three perceptrons and two outputs.

Because the default activation function is linear for the output layer and logistic for the hidden layers, a network that uses only linear activation requires you to specify IMSLS_LINEAR for every hidden layer. This example demonstrates how to change the activation function and bias values for hidden and output layer perceptrons, as shown in Figure 31 below.

Figure 31, A 2-layer Feedforward Network with 4 Inputs and 2 Outputs

#include <imsls.h>

int main()
{
    Imsls_f_NN_Network *network;
    int hidActFcn[3] = {IMSLS_LINEAR, IMSLS_LINEAR, IMSLS_LINEAR};
    float outbias[2] = {1.0, 1.0};
    float hidbias[3] = {1.0, 1.0, 1.0};

    network = imsls_f_mlff_network_init(4, 2);

    /* Create one hidden layer of 3 linear perceptrons, set the output
       layer (layer 2) biases, and link all layers */
    imsls_f_mlff_network(network,
        IMSLS_CREATE_HIDDEN_LAYER, 3,
        IMSLS_ACTIVATION_FCN, 1, hidActFcn,
        IMSLS_BIAS, 2, outbias,
        IMSLS_LINK_ALL,
        0);

    /* Set the hidden layer (layer 1) biases in a separate call */
    imsls_f_mlff_network(network,
        IMSLS_BIAS, 1, hidbias,
        0);

    imsls_f_mlff_network_free(network);
}

Example 3

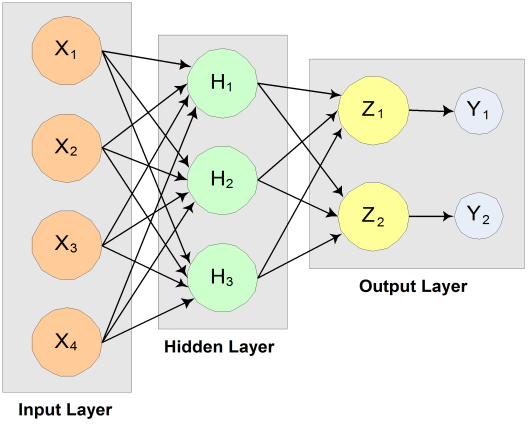

This example creates a three-layer feedforward network with six input nodes, which are not all connected to every node in the first hidden layer.

Note also that the four perceptrons in the first hidden layer are not connected to every node in the second hidden layer, and the perceptrons in the second hidden layer are not all connected to the two outputs:

Figure 32, A network that uses a total of nine perceptrons to produce two forecasts from six input attributes

This network uses a total of nine perceptrons to produce two forecasts from six input attributes.

Links among the input nodes and perceptrons can be created using one of several approaches. If all inputs are connected to every perceptron in the first hidden layer, and all perceptrons are connected to every perceptron in the following layer, which is a standard architecture for feedforward networks, then a single call with IMSLS_LINK_ALL creates these links.

However, this example does not use that standard configuration; some links are missing. IMSLS_LINK_NODE can be used to construct individual links, or, alternatively, all links can be created first and the unneeded ones then removed. This example illustrates the latter approach.

#include <imsls.h>

int main()
{
    Imsls_f_NN_Network *network;

    network = imsls_f_mlff_network_init(6, 2);

    /* Create 2 hidden layers and link all nodes */
    imsls_f_mlff_network(network, IMSLS_CREATE_HIDDEN_LAYER, 4, 0);
    imsls_f_mlff_network(network, IMSLS_CREATE_HIDDEN_LAYER, 3,
        IMSLS_LINK_ALL, 0);

    /* Remove unwanted links from Input 1 */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 8, 0, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 9, 0, 0);

    /* Remove unwanted links from Input 2 */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 9, 1, 0);

    /* Remove unwanted links from Input 3 */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 6, 2, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 9, 2, 0);

    /* Remove unwanted links from Input 4 */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 6, 3, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 7, 3, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 8, 3, 0);

    /* Remove unwanted links from Input 5 */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 6, 4, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 7, 4, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 8, 4, 0);

    /* Remove unwanted links from Input 6 */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 6, 5, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 7, 5, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 8, 5, 0);

    /* Add link from Input 1 to Output Perceptron 1 */
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 13, 0, 0);

    /* Remove unwanted links between hidden Layer 1 and hidden layer 2 */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 11, 8, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 12, 9, 0);

    /* Remove unwanted links between hidden Layer 2 and output layer */
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 14, 10, 0);

    imsls_f_mlff_network_free(network);
}

Another approach is to combine IMSLS_LINK_NODE, IMSLS_LINK_LAYER, and IMSLS_REMOVE_LINK: create the individual links from the input nodes with IMSLS_LINK_NODE, link the hidden layers and the output layer with IMSLS_LINK_LAYER, and then remove the links that are not needed. This example illustrates this approach:

#include <imsls.h>

int main()
{
    Imsls_f_NN_Network *network;

    network = imsls_f_mlff_network_init(6, 2);
    imsls_f_mlff_network(network, IMSLS_CREATE_HIDDEN_LAYER, 4, 0);
    imsls_f_mlff_network(network, IMSLS_CREATE_HIDDEN_LAYER, 3, 0);

    /* Link input attributes to first hidden layer */
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 6, 0, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 7, 0, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 6, 1, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 7, 1, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 8, 1, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 7, 2, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 8, 2, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 9, 3, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 9, 4, 0);
    imsls_f_mlff_network(network, IMSLS_LINK_NODE, 9, 5, 0);

    /* Link hidden layer 1 to hidden layer 2 then remove unwanted links */
    imsls_f_mlff_network(network, IMSLS_LINK_LAYER, 2, 1, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 11, 8, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 12, 9, 0);

    /* Link hidden layer 2 to output layer then remove unwanted link */
    imsls_f_mlff_network(network, IMSLS_LINK_LAYER, 3, 2, 0);
    imsls_f_mlff_network(network, IMSLS_REMOVE_LINK, 14, 10, 0);

    imsls_f_mlff_network_free(network);
}