- java.lang.Object
  - com.imsl.datamining.PredictiveModel
    - com.imsl.datamining.decisionTree.DecisionTree
      - com.imsl.datamining.decisionTree.DecisionTreeInfoGain
        - com.imsl.datamining.decisionTree.ALACART

- All Implemented Interfaces:

- DecisionTreeSurrogateMethod, Serializable, Cloneable

public class ALACART extends DecisionTreeInfoGain implements DecisionTreeSurrogateMethod, Serializable, Cloneable

Generates a decision tree using the CART™ method of Breiman, Friedman, Olshen and Stone (1984). CART™ stands for Classification and Regression Trees and applies to categorical or quantitative variables.

Only binary splits are considered for categorical variables. That is, if X has values {A, B, C, D}, only splits into two subsets are considered: for example, {A} and {B, C, D}, or {A, B} and {C, D}, are allowed, but a three-way split defined by {A}, {B} and {C, D} is not.
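To make the restriction concrete, the following sketch enumerates every binary split of a small category set. The class and method names (`BinarySplits`, `enumerate`) are hypothetical and are not part of the library; the sketch only illustrates that k categories admit 2^(k-1) - 1 distinct two-subset splits, and says nothing about how the library searches them.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative only: not part of the com.imsl API.
final class BinarySplits {

    /** Returns each binary split of values as a two-element list {left, right}. */
    static List<List<List<String>>> enumerate(List<String> values) {
        List<List<List<String>>> splits = new ArrayList<>();
        int k = values.size();
        if (k < 2) {
            return splits; // fewer than two categories cannot be split
        }
        // The first value always goes left, so each unordered split appears once.
        // The mask encodes which of the remaining k-1 values join it; the all-ones
        // mask is excluded because it would leave the right subset empty.
        for (int mask = 0; mask < (1 << (k - 1)) - 1; mask++) {
            List<String> left = new ArrayList<>();
            List<String> right = new ArrayList<>();
            left.add(values.get(0));
            for (int i = 1; i < k; i++) {
                if ((mask & (1 << (i - 1))) != 0) {
                    left.add(values.get(i));
                } else {
                    right.add(values.get(i));
                }
            }
            splits.add(Arrays.asList(left, right));
        }
        return splits;
    }

    public static void main(String[] args) {
        for (List<List<String>> s : enumerate(Arrays.asList("A", "B", "C", "D"))) {
            System.out.println(s.get(0) + " | " + s.get(1));
        }
    }
}
```

For four categories this yields seven candidate splits, which is why exhaustive search over categorical predictors becomes expensive as the number of categories grows.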

For classification problems,

ALACARTuses a similar criterion to information gain called impurity. The method searches for a split that reduces the node impurity the most. For a given set of data S at a node, the node impurity for a C-class categorical response is a function of the class probabilities.

The measure function

should be 0 for "pure"

nodes, where all Y are in the same class, and maximum when Y is

uniformly distributed across the classes.

should be 0 for "pure"

nodes, where all Y are in the same class, and maximum when Y is

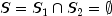

uniformly distributed across the classes.As only binary splits of a subset S are considered (S1, S2 such that

and

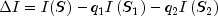

and  ), the reduction

in impurity when splitting S into S1,

S2 is

), the reduction

in impurity when splitting S into S1,

S2 is

where

is the node probability.![q_j = Pr[S_j], j = 1, 2](eqn_0264.png)

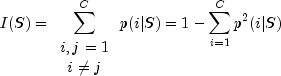

The gain criteria and the reduction in impurity

are similar concepts and equivalent when I is entropy and when only

binary splits are considered. Another popular measure for the impurity at a

node is the Gini index, given by

are similar concepts and equivalent when I is entropy and when only

binary splits are considered. Another popular measure for the impurity at a

node is the Gini index, given by
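The impurity measures and the reduction in impurity can be checked numerically with a few lines of code. The sketch below is a stand-alone illustration, not the library's implementation: the class `ImpuritySketch` and its methods are hypothetical names, and the node probabilities are assumed to be the plain proportions of observations falling in each child (no weights or prior probabilities).

```java
// Illustrative only: not part of the com.imsl API.
final class ImpuritySketch {

    /** Converts class counts at a node into class proportions p(i|S). */
    static double[] proportions(double[] counts) {
        double total = 0;
        for (double c : counts) total += c;
        if (total <= 0) {
            throw new IllegalArgumentException("node must contain observations");
        }
        double[] p = new double[counts.length];
        for (int i = 0; i < counts.length; i++) p[i] = counts[i] / total;
        return p;
    }

    /** Gini index: 1 minus the sum of squared class proportions. */
    static double gini(double[] p) {
        double sumSq = 0;
        for (double pi : p) sumSq += pi * pi;
        return 1.0 - sumSq;
    }

    /** Entropy in bits, with 0 * log(0) taken as 0. */
    static double entropy(double[] p) {
        double h = 0;
        for (double pi : p) {
            if (pi > 0) h -= pi * Math.log(pi) / Math.log(2);
        }
        return h;
    }

    /** Reduction in impurity I(S) - q1 I(S1) - q2 I(S2), using the Gini index as I. */
    static double giniReduction(double[] leftCounts, double[] rightCounts) {
        double nLeft = 0, nRight = 0;
        double[] parent = new double[leftCounts.length];
        for (int i = 0; i < parent.length; i++) {
            parent[i] = leftCounts[i] + rightCounts[i];
            nLeft += leftCounts[i];
            nRight += rightCounts[i];
        }
        // Assumption: node probabilities are the observed child proportions.
        double q1 = nLeft / (nLeft + nRight);
        double q2 = nRight / (nLeft + nRight);
        return gini(proportions(parent))
                - q1 * gini(proportions(leftCounts))
                - q2 * gini(proportions(rightCounts));
    }

    public static void main(String[] args) {
        // Two classes, 10 observations each, separated perfectly by the split.
        System.out.println(giniReduction(new double[] {10, 0}, new double[] {0, 10}));
    }
}
```

Both measures are 0 for a pure node and largest for a uniform class distribution, which is the behavior required of the measure function above.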

If Y is an ordered response or continuous, the problem is a regression problem. ALACART generates the tree using the same steps, except that the node-level measures, or loss functions, are the mean squared error (MSE) or mean absolute error (MAD) rather than node impurity measures.

Missing Values

Any observation or case with a missing response variable is eliminated from the analysis. If a predictor has a missing value, the algorithm skips that case when evaluating the given predictor. When making a prediction for a new case, if the split variable is missing, the prediction function applies surrogate split variables and splitting rules in turn, if they were estimated with the decision tree. Otherwise, the prediction function returns the prediction from the most recent non-terminal node. In this implementation, only ALACART estimates surrogate split variables when requested.

- See Also:

- Example 1, Serialized Form
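The prediction rule for missing split variables described under Missing Values can be sketched as follows. This is a simplified stand-alone illustration, not the library's code: the types `Rule` and `Node` are hypothetical, missing values are assumed to be coded as `Double.NaN`, and every rule is assumed to be a simple threshold on a quantitative predictor.

```java
// Illustrative only: these types are hypothetical and not part of the com.imsl API.
final class SurrogateSketch {

    /** Threshold rule on one predictor: go left when x[var] <= threshold. */
    static final class Rule {
        final int var;
        final double threshold;

        Rule(int var, double threshold) {
            this.var = var;
            this.threshold = threshold;
        }
    }

    static final class Node {
        final double prediction; // prediction stored at this node
        final Rule[] rules;      // rules[0] is the primary split, the rest are surrogates
        final Node left, right;  // both null for a terminal node

        Node(double prediction, Rule[] rules, Node left, Node right) {
            this.prediction = prediction;
            this.rules = rules;
            this.left = left;
            this.right = right;
        }
    }

    static Node leaf(double prediction) {
        return new Node(prediction, new Rule[0], null, null);
    }

    static double predict(Node node, double[] x) {
        while (node.left != null) {
            Rule usable = null;
            for (Rule r : node.rules) { // primary first, then surrogates in turn
                if (!Double.isNaN(x[r.var])) { // assumption: NaN marks a missing value
                    usable = r;
                    break;
                }
            }
            if (usable == null) {
                return node.prediction; // no usable rule: stop at this non-terminal node
            }
            node = x[usable.var] <= usable.threshold ? node.left : node.right;
        }
        return node.prediction;
    }

    public static void main(String[] args) {
        Node root = new Node(1.5,
                new Rule[] {new Rule(0, 5.0), new Rule(1, 2.0)},
                leaf(1.0), leaf(2.0));
        System.out.println(predict(root, new double[] {3.0, 9.0}));
        System.out.println(predict(root, new double[] {Double.NaN, 9.0}));
        System.out.println(predict(root, new double[] {Double.NaN, Double.NaN}));
    }
}
```

The three calls in `main` exercise the primary rule, the surrogate rule, and the fallback to the non-terminal node's own prediction, in that order.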


Nested Class Summary


Nested classes/interfaces inherited from class com.imsl.datamining.decisionTree.DecisionTreeInfoGain

DecisionTreeInfoGain.GainCriteria


Nested classes/interfaces inherited from class com.imsl.datamining.decisionTree.DecisionTree

DecisionTree.MaxTreeSizeExceededException, DecisionTree.PruningFailedToConvergeException, DecisionTree.PureNodeException


Nested classes/interfaces inherited from class com.imsl.datamining.PredictiveModel

PredictiveModel.PredictiveModelException, PredictiveModel.StateChangeException, PredictiveModel.SumOfProbabilitiesNotOneException, PredictiveModel.VariableType


Constructor Summary

| Constructor | Description |
| --- | --- |
| ALACART(double[][] xy, int responseColumnIndex, PredictiveModel.VariableType[] varType) | Constructs an ALACART decision tree for a single response variable and multiple predictor variables. |

Method Summary

| Modifier and Type | Method | Description |
| --- | --- | --- |
| void | addSurrogates(Tree tree, double[] surrogateInfo) | Adds the surrogate information to the tree. |
| int | getNumberOfSurrogateSplits() | Returns the number of surrogate splits. |
| double[] | getSurrogateInfo() | Returns the surrogate split information. |
| protected int | selectSplitVariable(double[][] xy, double[] classCounts, double[] parentFreq, double[] splitValue, int[] splitPartition) | Selects the split variable for the present node using the CART™ method. |
| void | setNumberOfSurrogateSplits(int nSplits) | Sets the number of surrogate splits. |

Methods inherited from class com.imsl.datamining.decisionTree.DecisionTreeInfoGain

information, setGainCriteria, setUseRatio, useGainRatio


Methods inherited from class com.imsl.datamining.decisionTree.DecisionTree

fitModel, getCostComplexityValues, getDecisionTree, getFittedMeanSquaredError, getMaxDepth, getMaxNodes, getMeanSquaredPredictionError, getMinObsPerChildNode, getMinObsPerNode, getNumberOfComplexityValues, getNumberOfSets, isAutoPruningFlag, predict, predict, predict, printDecisionTree, printDecisionTree, pruneTree, setAutoPruningFlag, setConfiguration, setCostComplexityValues, setMaxDepth, setMaxNodes, setMinCostComplexityValue, setMinObsPerChildNode, setMinObsPerNode


Methods inherited from class com.imsl.datamining.PredictiveModel

getClassCounts, getCostMatrix, getMaxNumberOfCategories, getNumberOfClasses, getNumberOfColumns, getNumberOfMissing, getNumberOfPredictors, getNumberOfRows, getNumberOfUniquePredictorValues, getPredictorIndexes, getPredictorTypes, getPrintLevel, getPriorProbabilities, getResponseColumnIndex, getResponseVariableAverage, getResponseVariableMostFrequentClass, getResponseVariableType, getTotalWeight, getVariableType, getWeights, getXY, isMustFitModelFlag, isUserFixedNClasses, setClassCounts, setCostMatrix, setMaxNumberOfCategories, setNumberOfClasses, setPredictorIndex, setPredictorTypes, setPrintLevel, setPriorProbabilities, setWeights


Constructor Detail


ALACART

public ALACART(double[][] xy, int responseColumnIndex, PredictiveModel.VariableType[] varType)

Constructs an ALACART decision tree for a single response variable and multiple predictor variables.

- Parameters:

- xy - a double matrix with rows containing the observations on the predictor variables and one response variable.

- responseColumnIndex - an int specifying the column index of the response variable.

- varType - a PredictiveModel.VariableType array containing the type of each variable.

Method Detail


addSurrogates

public void addSurrogates(Tree tree, double[] surrogateInfo)

Adds the surrogate information to the tree.

- Specified by:

- addSurrogates in interface DecisionTreeSurrogateMethod

- Parameters:

- tree - a Tree containing the decision tree structure.

- surrogateInfo - a double array containing the surrogate split information.

getNumberOfSurrogateSplits

public int getNumberOfSurrogateSplits()

Returns the number of surrogate splits.

- Specified by:

- getNumberOfSurrogateSplits in interface DecisionTreeSurrogateMethod

- Returns:

- an int specifying the number of surrogate splits.

getSurrogateInfo

public double[] getSurrogateInfo()

Returns the surrogate split information.

- Specified by:

- getSurrogateInfo in interface DecisionTreeSurrogateMethod

- Returns:

- a double[] containing the surrogate split information.

selectSplitVariable

protected int selectSplitVariable(double[][] xy, double[] classCounts, double[] parentFreq, double[] splitValue, int[] splitPartition)

Selects the split variable for the present node using the CART™ method.

- Specified by:

- selectSplitVariable in class DecisionTreeInfoGain

- Parameters:

- xy - a double matrix containing the data.

- classCounts - a double array containing the counts for each class of the response variable, when it is categorical.

- parentFreq - a double array used to determine the subset of the observations that belong to the current node.

- splitValue - a double array representing the resulting split point if the selected variable is quantitative.

- splitPartition - an int array indicating the resulting split partition if the selected variable is categorical.

- Returns:

- an int specifying the column index of the split variable in xy.
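This page does not describe how the split search is carried out internally. As a rough illustration of what CART-style split selection involves for one quantitative predictor and a categorical response, the sketch below scans the candidate thresholds and keeps the one with the lowest weighted Gini impurity in the two children, which is the same as the largest reduction in impurity. The class `BestThreshold`, its method names, and the use of midpoints between adjacent distinct values as candidate thresholds are all assumptions made for the example, not the library's behavior.

```java
import java.util.Arrays;

// Illustrative only: not part of the com.imsl API.
final class BestThreshold {

    /**
     * Returns the threshold t for the rule "x <= t" that minimizes the weighted
     * Gini impurity of the two children, or NaN when no split is possible.
     * Class labels in y are assumed to be integers in 0 .. nClasses-1.
     */
    static double find(double[] x, int[] y, int nClasses) {
        int n = x.length;
        Integer[] order = new Integer[n];
        for (int i = 0; i < n; i++) order[i] = i;
        Arrays.sort(order, (a, b) -> Double.compare(x[a], x[b]));

        double[] left = new double[nClasses];  // class counts in the left child
        double[] right = new double[nClasses]; // class counts in the right child
        for (int label : y) right[label]++;

        double best = Double.NaN;
        double bestScore = Double.POSITIVE_INFINITY;
        for (int i = 0; i < n - 1; i++) {
            int idx = order[i];
            left[y[idx]]++;  // move observation idx from the right child to the left
            right[y[idx]]--;
            double lo = x[idx];
            double hi = x[order[i + 1]];
            if (lo == hi) continue; // cannot split between equal values
            int nLeft = i + 1;
            double score = nLeft * gini(left, nLeft) + (n - nLeft) * gini(right, n - nLeft);
            if (score < bestScore) {
                bestScore = score;
                best = (lo + hi) / 2; // assumption: midpoint as the split point
            }
        }
        return best;
    }

    /** Gini index computed from class counts and their total. */
    static double gini(double[] counts, int total) {
        double sumSq = 0;
        for (double c : counts) sumSq += (c / total) * (c / total);
        return 1.0 - sumSq;
    }

    public static void main(String[] args) {
        double[] x = {1, 2, 3, 10, 11, 12};
        int[] y = {0, 0, 0, 1, 1, 1};
        System.out.println(find(x, y, 2));
    }
}
```

A full split selection would repeat a search like this for every predictor, handle categorical predictors through binary partitions, and return the column index of the winning variable.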


setNumberOfSurrogateSplits

public void setNumberOfSurrogateSplits(int nSplits)

Sets the number of surrogate splits.

- Specified by:

- setNumberOfSurrogateSplits in interface DecisionTreeSurrogateMethod

- Parameters:

- nSplits - an int specifying the number of predictors to consider as surrogate splitting variables. Default: nSplits = 0.