- java.lang.Object
  - com.imsl.datamining.PredictiveModel
    - com.imsl.datamining.decisionTree.DecisionTree

- All Implemented Interfaces:

- Serializable, Cloneable

- Direct Known Subclasses:

- CHAID, DecisionTreeInfoGain, QUEST

public abstract class DecisionTree extends PredictiveModel implements Serializable, Cloneable

Abstract class for generating a decision tree for a single response variable and one or more predictor variables.

Tree Generation Methods

This package contains four of the most widely used algorithms for decision trees (C45, ALACART, CHAID, and QUEST). The user may also provide an alternate algorithm by extending the DecisionTree or DecisionTreeInfoGain abstract class and implementing the abstract method selectSplitVariable(double[][], double[], double[], double[], int[]).

Optimal Tree Size

Minimum Cost-complexity Pruning

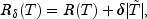

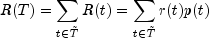

A strategy to address overfitting is to grow the tree as large as possible, and then use some logic to prune it back. Let T represent a decision tree generated by any of the methods above. The idea (from Breiman, et. al.) is to find the smallest sub-tree of T that minimizes the cost-complexity measure:

denotes the set of terminal nodes.

denotes the set of terminal nodes.

represents the number of terminal nodes, and

represents the number of terminal nodes, and

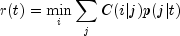

is a cost-complexity parameter. For a

categorical target variable

is a cost-complexity parameter. For a

categorical target variable

![p(t)=mbox{Pr}[xin t]](eqn_0233.png)

![mbox{and};p(j|t)=mbox{Pr}[y=j|xin t]mbox{,}](eqn_0234.png)

and

is the cost for misclassifying the actual

class j as i. Note that

is the cost for misclassifying the actual

class j as i. Note that  and

and

, for

, for  .

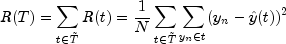

.When the target is continuous (and the problem is a regression problem), the metric is instead the mean squared error

This class implements the optimal pruning algorithm 10.1, page 294 in Breiman, et. al (1984). The result of the algorithm is a sequence of sub-trees

obtained by pruning the fully generated tree,

obtained by pruning the fully generated tree,

, until the sub-tree consists of the single

root node,

, until the sub-tree consists of the single

root node,  . Corresponding to the sequence of

sub-trees is the sequence of complexity values,

. Corresponding to the sequence of

sub-trees is the sequence of complexity values,  where M is the number of steps it takes in the algorithm

to reach the root node. The sub-trees represent the optimally-pruned

sub-trees for the sequence of complexity values. The minimum complexity

where M is the number of steps it takes in the algorithm

to reach the root node. The sub-trees represent the optimally-pruned

sub-trees for the sequence of complexity values. The minimum complexity

can be set via an optional argument.
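The cost-complexity measure described above can be illustrated with a small, self-contained sketch. The class and method names below (CostComplexitySketch, nodeRisk, costComplexity, bestSubtree) are hypothetical and are not part of this package; the risk values are made up. The sketch only evaluates the measure for a given list of candidate sub-trees and does not reproduce Breiman's pruning algorithm.

```java
/** Hypothetical helper, not part of the library: evaluates the cost-complexity measure. */
final class CostComplexitySketch {

    /** r(t) = min over i of the sum over j of C(i|j) p(j|t); cost[i][j] holds C(i|j). */
    static double nodeRisk(double[][] cost, double[] classProbs) {
        double best = Double.POSITIVE_INFINITY;
        for (double[] costOfPredictingI : cost) {
            double expected = 0.0;
            for (int j = 0; j < classProbs.length; j++) {
                expected += costOfPredictingI[j] * classProbs[j];
            }
            best = Math.min(best, expected);
        }
        return best;
    }

    /** R(T) + gamma * (number of terminal nodes). */
    static double costComplexity(double treeRisk, int terminalNodes, double gamma) {
        return treeRisk + gamma * terminalNodes;
    }

    /** Index of the sub-tree with the smallest measure; ties go to fewer terminal nodes. */
    static int bestSubtree(double[] treeRisks, int[] terminalNodes, double gamma) {
        int best = 0;
        for (int k = 1; k < treeRisks.length; k++) {
            double candidate = costComplexity(treeRisks[k], terminalNodes[k], gamma);
            double incumbent = costComplexity(treeRisks[best], terminalNodes[best], gamma);
            if (candidate < incumbent
                    || (candidate == incumbent && terminalNodes[k] < terminalNodes[best])) {
                best = k;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[] risks = {0.10, 0.15, 0.40}; // made-up R(T) values for three nested sub-trees
        int[] leaves = {5, 3, 1};            // their terminal node counts
        for (double gamma : new double[] {0.0, 0.05, 0.2}) {
            System.out.println("gamma=" + gamma + " -> sub-tree " + bestSubtree(risks, leaves, gamma));
        }
    }
}
```

As the complexity parameter grows, each extra terminal node costs more, so the minimizing sub-tree moves from the largest candidate toward the root-only tree.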

V-Fold Cross-Validation

The CrossValidation class can be used for model validation.

Prediction

Bagging

The BootstrapAggregation class provides predictions through a resampling scheme.

Missing Values

Any observation or case with a missing response variable is eliminated from the analysis. If a predictor has a missing value, each algorithm will skip that case when evaluating the given predictor. When making a prediction for a new case, if the split variable is missing, the prediction function applies surrogate split-variables and splitting rules in turn, if they are estimated with the decision tree. Otherwise, the prediction function returns the prediction from the most recent non-terminal node. In this implementation, only ALACART estimates surrogate split variables when requested.

- See Also:

- Example 1, Example 2, Example 3, Example 4, Serialized Form

-

-

Nested Class Summary

Nested Classes

| Modifier and Type | Class | Description |
| --- | --- | --- |
| static class | DecisionTree.MaxTreeSizeExceededException | Exception thrown when the maximum tree size has been exceeded. |
| static class | DecisionTree.PruningFailedToConvergeException | Exception thrown when pruning fails to converge. |
| static class | DecisionTree.PureNodeException | Exception thrown when attempting to split a node that is already pure (response variable is constant). |

Nested classes/interfaces inherited from class com.imsl.datamining.PredictiveModel

PredictiveModel.PredictiveModelException, PredictiveModel.StateChangeException, PredictiveModel.SumOfProbabilitiesNotOneException, PredictiveModel.VariableType

-

-

Constructor Summary

Constructors

| Constructor | Description |
| --- | --- |
| DecisionTree(double[][] xy, int responseColumnIndex, PredictiveModel.VariableType[] varType) | Constructs a DecisionTree object for a single response variable and multiple predictor variables. |

-

Method Summary

Methods

| Modifier and Type | Method | Description |
| --- | --- | --- |
| void | fitModel() | Fits the decision tree. |
| double[] | getCostComplexityValues() | Returns an array containing cost-complexity values. |
| Tree | getDecisionTree() | Returns a Tree object. |
| double | getFittedMeanSquaredError() | Returns the mean squared error on the training data. |
| int | getMaxDepth() | Returns the maximum depth a tree is allowed to have. |
| int | getMaxNodes() | Returns the maximum number of TreeNode instances allowed in a tree. |
| double | getMeanSquaredPredictionError() | Returns the mean squared error. |
| int | getMinObsPerChildNode() | Returns the minimum number of observations that are required for any child node before performing a split. |
| int | getMinObsPerNode() | Returns the minimum number of observations that are required in a node before performing a split. |
| int | getNumberOfComplexityValues() | Returns the number of cost-complexity values determined. |
| protected int | getNumberOfSets(double[] parentFreqs, int[] splita) | Returns the number of sets for a split. |
| boolean | isAutoPruningFlag() | Returns the auto-pruning flag. |
| double[] | predict() | Predicts the training examples (in-sample predictions) using the most recently grown tree. |
| double[] | predict(double[][] testData) | Predicts new data using the most recently grown decision tree. |
| double[] | predict(double[][] testData, double[] testDataWeights) | Predicts new weighted data using the most recently grown decision tree. |
| void | printDecisionTree(boolean printMaxTree) | Prints the contents of the Decision Tree using distinct but general labels. |
| void | printDecisionTree(String responseName, String[] predictorNames, String[] classNames, String[] categoryNames, boolean printMaxTree) | Prints the contents of the Decision Tree. |
| void | pruneTree(double gamma) | Finds the minimum cost-complexity decision tree for the cost-complexity value, gamma. |
| protected abstract int | selectSplitVariable(double[][] xy, double[] classCounts, double[] parentFreq, double[] splitValue, int[] splitPartition) | Abstract method for selecting the next split variable and split definition for the node. |
| void | setAutoPruningFlag(boolean autoPruningFlag) | Sets the flag to automatically prune the tree during the fitting procedure. |
| protected void | setConfiguration(PredictiveModel pm) | Sets the configuration of PredictiveModel to that of the input model. |
| void | setCostComplexityValues(double[] gammas) | Sets the cost-complexity values. |
| void | setMaxDepth(int nLevels) | Specifies the maximum tree depth allowed. |
| void | setMaxNodes(int maxNodes) | Sets the maximum number of nodes allowed in a tree. |
| void | setMinCostComplexityValue(double minCostComplexity) | Sets the value of the minimum cost-complexity value. |
| void | setMinObsPerChildNode(int nObs) | Specifies the minimum number of observations that a child node must have in order to split, one of several tree size and splitting control parameters. |
| void | setMinObsPerNode(int nObs) | Specifies the minimum number of observations a node must have to allow a split, one of several tree size and splitting control parameters. |

Methods inherited from class com.imsl.datamining.PredictiveModel

getClassCounts, getCostMatrix, getMaxNumberOfCategories, getNumberOfClasses, getNumberOfColumns, getNumberOfMissing, getNumberOfPredictors, getNumberOfRows, getNumberOfUniquePredictorValues, getPredictorIndexes, getPredictorTypes, getPrintLevel, getPriorProbabilities, getResponseColumnIndex, getResponseVariableAverage, getResponseVariableMostFrequentClass, getResponseVariableType, getTotalWeight, getVariableType, getWeights, getXY, isMustFitModelFlag, isUserFixedNClasses, setClassCounts, setCostMatrix, setMaxNumberOfCategories, setNumberOfClasses, setPredictorIndex, setPredictorTypes, setPrintLevel, setPriorProbabilities, setWeights

-

-

-

-

Constructor Detail

-

DecisionTree

public DecisionTree(double[][] xy, int responseColumnIndex, PredictiveModel.VariableType[] varType)

Constructs a DecisionTree object for a single response variable and multiple predictor variables.

- Parameters:

xy - a double matrix with rows containing the observations on the predictor variables and one response variable.

responseColumnIndex - an int specifying the column index of the response variable.

varType - a PredictiveModel.VariableType array containing the type of each variable.
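The layout of xy can be pictured with a short sketch: each row is one observation, one column holds the response, and the remaining columns hold predictors. The class XyLayoutSketch and its methods are hypothetical illustrations, not library code, and the sketch assumes every column other than the response is used as a predictor.

```java
/** Hypothetical illustration of the xy layout; not part of the library. */
final class XyLayoutSketch {

    /** Copies the response column out of the row-major data matrix. */
    static double[] responseColumn(double[][] xy, int responseColumnIndex) {
        double[] y = new double[xy.length];
        for (int row = 0; row < xy.length; row++) {
            y[row] = xy[row][responseColumnIndex];
        }
        return y;
    }

    /** Lists every column index except the response column (assumed to be the predictors). */
    static int[] predictorIndexes(int numberOfColumns, int responseColumnIndex) {
        int[] indexes = new int[numberOfColumns - 1];
        int next = 0;
        for (int col = 0; col < numberOfColumns; col++) {
            if (col != responseColumnIndex) {
                indexes[next++] = col;
            }
        }
        return indexes;
    }
}
```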

-

-

Method Detail

-

fitModel

public void fitModel() throws PredictiveModel.PredictiveModelException, DecisionTree.PruningFailedToConvergeException, PredictiveModel.StateChangeException, DecisionTree.PureNodeException, PredictiveModel.SumOfProbabilitiesNotOneException, DecisionTree.MaxTreeSizeExceededException

Fits the decision tree. Implements the abstract method.

- Overrides:

fitModel in class PredictiveModel

- Throws:

PredictiveModel.PredictiveModelException - an exception has occurred in com.imsl.datamining.PredictiveModel. Superclass exceptions should be considered, such as com.imsl.datamining.PredictiveModel.StateChangeException and com.imsl.datamining.PredictiveModel.SumOfProbabilitiesNotOneException.

DecisionTree.PruningFailedToConvergeException - pruning has failed to converge.

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.

DecisionTree.PureNodeException - attempting to split a node that is already pure.

PredictiveModel.SumOfProbabilitiesNotOneException - the sum of probabilities is not approximately one.

DecisionTree.MaxTreeSizeExceededException - the maximum tree size has been exceeded.

-

getCostComplexityValues

public double[] getCostComplexityValues() throws DecisionTree.PruningFailedToConvergeException, PredictiveModel.StateChangeException

Returns an array containing cost-complexity values.

- Returns:

a double array containing the cost-complexity values.

The cost-complexity values are found via the optimal pruning algorithm of Breiman, et al.

- Throws:

DecisionTree.PruningFailedToConvergeException - pruning has failed to converge.

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.

-

getDecisionTree

public Tree getDecisionTree() throws PredictiveModel.StateChangeException

Returns a Tree object.

- Returns:

a Tree object containing the tree structure information.

- Throws:

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.

-

getFittedMeanSquaredError

public double getFittedMeanSquaredError() throws PredictiveModel.StateChangeException

Returns the mean squared error on the training data.

- Returns:

a double equal to the mean squared error between the fitted value and the actual value of the response variable in the training data.

- Throws:

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.

-

getMaxDepth

public int getMaxDepth()

Returns the maximum depth a tree is allowed to have.

- Returns:

an int indicating the maximum depth a tree is allowed to have.

-

getMaxNodes

public int getMaxNodes()

Returns the maximum number of TreeNode instances allowed in a tree.

- Returns:

an int indicating the maximum number of nodes allowed in a tree.

-

getMeanSquaredPredictionError

public double getMeanSquaredPredictionError() throws PredictiveModel.StateChangeException

Returns the mean squared error.

- Returns:

a double equal to the mean squared error between the predicted value and the actual value of the response variable. The error is the in-sample fitted error if predict is first called with no arguments. Otherwise, the error is relative to the test data provided in the call to predict.

- Throws:

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.
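For reference, the quantity described here can be written as a few lines of standalone code. This is a minimal sketch of an unweighted mean squared error between predicted and actual responses; the class MeanSquaredErrorSketch is hypothetical, and whether the library applies observation weights in this calculation is not stated on this page.

```java
/** Hypothetical helper showing an unweighted mean squared error; not library code. */
final class MeanSquaredErrorSketch {

    /** Averages the squared differences between predictions and actual responses. */
    static double meanSquaredError(double[] predicted, double[] actual) {
        if (predicted.length != actual.length || predicted.length == 0) {
            throw new IllegalArgumentException("arrays must be non-empty and of equal length");
        }
        double sumOfSquares = 0.0;
        for (int i = 0; i < predicted.length; i++) {
            double residual = predicted[i] - actual[i];
            sumOfSquares += residual * residual;
        }
        return sumOfSquares / predicted.length;
    }
}
```

Passing in-sample predictions gives the fitted error; passing predictions for held-out rows gives the prediction error for that test set.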

-

getMinObsPerChildNode

public int getMinObsPerChildNode()

Returns the minimum number of observations that are required for any child node before performing a split.

- Returns:

an int indicating the minimum number of observations that are required for any child node before performing a split.

-

getMinObsPerNode

public int getMinObsPerNode()

Returns the minimum number of observations that are required in a node before performing a split.

- Returns:

an int indicating the minimum number of observations that are required in a node before performing a split.

-

getNumberOfComplexityValues

public int getNumberOfComplexityValues()

Returns the number of cost-complexity values determined.

- Returns:

an int indicating the number of cost-complexity values determined.

-

getNumberOfSets

protected int getNumberOfSets(double[] parentFreqs, int[] splita)

Returns the number of sets for a split.

- Parameters:

parentFreqs - a double array containing frequencies of the response variable in the data subset of the parent node.

splita - an int array that contains the split partition determined in the selectSplitVariable(double[][], double[], double[], double[], int[]) method.

- Returns:

an int that is the number of sets.

-

isAutoPruningFlag

public boolean isAutoPruningFlag()

Returns the auto-pruning flag. See the description of setAutoPruningFlag(boolean) for details.

- Returns:

a boolean which, if true, means that the model is configured to perform automatic pruning.

-

predict

public double[] predict() throws PredictiveModel.StateChangeException

Predicts the training examples (in-sample predictions) using the most recently grown tree.

- Specified by:

predict in class PredictiveModel

- Returns:

a double array of fitted values of the response variable using the most recently grown decision tree. To populate fitted values, use the predict method without arguments.

- Throws:

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.

-

predict

public double[] predict(double[][] testData) throws PredictiveModel.StateChangeException

Predicts new data using the most recently grown decision tree.

- Specified by:

predict in class PredictiveModel

- Parameters:

testData - a double matrix containing test data for which predictions are to be made using the current tree. testData must have the same number of columns and the columns must be in the same arrangement as xy.

- Returns:

a double array of predicted values of the response variable using the most recently grown decision tree.

- Throws:

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.
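The class description says that when a split variable is missing for a new case, surrogate splits are tried in turn, and otherwise the prediction of the most recent non-terminal node is returned. A minimal sketch of that rule follows. Everything in it is hypothetical: the Node type, the use of NaN to mark a missing value, threshold-style splits, and the simplification that a surrogate sends a case in the same direction as the primary split. It is not the library's prediction code.

```java
/** Hypothetical sketch of prediction with missing split values; not library code. */
final class MissingValuePredictionSketch {

    static final class Node {
        final double prediction;   // value returned if traversal stops at this node
        final int[] splitVars;     // primary split variable first, then surrogates
        final double[] thresholds; // go left when x[var] <= threshold
        final Node left;
        final Node right;

        /** Terminal node. */
        Node(double prediction) {
            this(prediction, new int[0], new double[0], null, null);
        }

        /** Non-terminal node with a primary split and optional surrogates. */
        Node(double prediction, int[] splitVars, double[] thresholds, Node left, Node right) {
            this.prediction = prediction;
            this.splitVars = splitVars;
            this.thresholds = thresholds;
            this.left = left;
            this.right = right;
        }

        boolean isTerminal() {
            return left == null;
        }
    }

    /** Walks the tree, falling back to surrogates and then to the current node's prediction. */
    static double predict(Node root, double[] x) {
        Node node = root;
        while (!node.isTerminal()) {
            Node next = null;
            for (int k = 0; k < node.splitVars.length; k++) {
                double value = x[node.splitVars[k]];
                if (!Double.isNaN(value)) { // NaN marks a missing value in this sketch
                    next = value <= node.thresholds[k] ? node.left : node.right;
                    break;
                }
            }
            if (next == null) {
                return node.prediction; // no usable split: stop at this non-terminal node
            }
            node = next;
        }
        return node.prediction;
    }
}
```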

-

predict

public double[] predict(double[][] testData, double[] testDataWeights) throws PredictiveModel.StateChangeException

Predicts new weighted data using the most recently grown decision tree.

- Specified by:

predict in class PredictiveModel

- Parameters:

testData - a double matrix containing test data for which predictions are to be made using the current tree. testData must have the same number of columns and the columns must be in the same arrangement as xy.

testDataWeights - a double array containing weights for each row of testData.

- Returns:

a double array of predicted values of the response variable using the most recently grown decision tree.

- Throws:

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.

-

printDecisionTree

public void printDecisionTree(boolean printMaxTree)

Prints the contents of the Decision Tree using distinct but general labels.

This method uses default values for the variable labels when printing (see printDecisionTree(String, String[], String[], String[], boolean) for these values).

- Parameters:

printMaxTree - a boolean indicating that the maximal tree should be printed. Otherwise the pruned tree is printed.

-

printDecisionTree

public void printDecisionTree(String responseName, String[] predictorNames, String[] classNames, String[] categoryNames, boolean printMaxTree)

Prints the contents of the Decision Tree.

- Parameters:

responseName - a String specifying a name for the response variable. If null, the default value is used.

Default: responseName = Y

predictorNames - a String array specifying names for the predictor variables. If null, the default value is used.

Default: predictorNames = X0, X1, ...

classNames - a String array specifying names for the classes. If null, the default value is used.

Default: classNames = 0, 1, 2, ...

categoryNames - a String array specifying names for the categories. If null, the default value is used.

Default: categoryNames = 0, 1, 2, ...

printMaxTree - a boolean indicating that the maximal tree should be printed. Otherwise the pruned tree is printed.

-

pruneTree

public void pruneTree(double gamma)

Finds the minimum cost-complexity decision tree for the cost-complexity value, gamma.

The method implements the optimal pruning algorithm 10.1, page 294 in Breiman, et al. (1984). The result of the algorithm is a sequence of sub-trees $T_{\max} \supset T_1 \supset T_2 \supset \dots \supset T_{M-1}$ obtained by pruning the fully generated tree, $T_{\max}$, until the sub-tree consists of the single root node, $T_{M-1}$. Corresponding to the sequence of sub-trees is the sequence of complexity values, $0 \leq \gamma_{\min} < \gamma_1 < \gamma_2 < \dots < \gamma_{M-1}$, where M is the number of steps it takes in the algorithm to reach the root node. The sub-trees represent the optimally pruned sub-trees for the sequence of complexity values.

The effect of the pruning is stored in the tree's terminal node array. That is, when the algorithm determines that the tree should be pruned at a particular node, it sets that node to be a terminal node using the method Tree.setTerminalNode(int, boolean). No other changes are made to the tree structure so that the maximal tree can still be printed and reviewed. However, once a tree is pruned, all the predictions will use the pruned tree.

- Parameters:

gamma - a double equal to the cost-complexity parameter.
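The idea of recording pruning only as terminal-node flags, while leaving the tree structure intact, can be sketched as follows. The class PruneByFlagSketch, its array layout, and the risk values used with it are hypothetical. The sketch uses a simple bottom-up recursion that yields the smallest sub-tree minimizing the risk plus gamma times the number of terminal nodes; it is not a transcription of Breiman's Algorithm 10.1 and not the library's implementation. It also assumes that each pruning call restarts from the maximal tree.

```java
/** Hypothetical sketch: pruning recorded as terminal flags on an unchanged binary tree. */
final class PruneByFlagSketch {
    final int[] left;         // index of the left child, -1 for a leaf of the maximal tree
    final int[] right;        // index of the right child, -1 for a leaf of the maximal tree
    final double[] risk;      // R(t): risk of node t if it were made terminal
    final boolean[] terminal; // pruning result; the child links are never modified

    PruneByFlagSketch(int[] left, int[] right, double[] risk) {
        this.left = left;
        this.right = right;
        this.risk = risk;
        this.terminal = new boolean[left.length];
        resetFlags();
    }

    private void resetFlags() {
        for (int t = 0; t < left.length; t++) {
            terminal[t] = left[t] < 0;
        }
    }

    /** Flags nodes as terminal so that the flagged tree minimizes risk + gamma * leaves. */
    void prune(double gamma) {
        resetFlags(); // assumption of this sketch: always prune from the maximal tree
        branchCost(0, gamma);
    }

    /** Returns the smallest cost-complexity achievable within the branch rooted at t. */
    private double branchCost(int t, double gamma) {
        double asLeaf = risk[t] + gamma;
        if (left[t] < 0) {
            return asLeaf;
        }
        double asBranch = branchCost(left[t], gamma) + branchCost(right[t], gamma);
        if (asLeaf <= asBranch) { // ties favor the smaller tree
            terminal[t] = true;
            return asLeaf;
        }
        return asBranch;
    }

    /** Counts terminal nodes of the pruned tree, starting at the root (index 0). */
    int leafCount() {
        return countLeaves(0);
    }

    private int countLeaves(int t) {
        return terminal[t] ? 1 : countLeaves(left[t]) + countLeaves(right[t]);
    }
}
```

Because only the flags change, pruning again with a smaller gamma recovers a larger tree in this sketch, which mirrors the statement that the maximal tree remains available for printing and review.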

-

selectSplitVariable

protected abstract int selectSplitVariable(double[][] xy, double[] classCounts, double[] parentFreq, double[] splitValue, int[] splitPartition)

Abstract method for selecting the next split variable and split definition for the node.

- Parameters:

xy - a double matrix containing the data.

classCounts - a double array containing the counts for each class of the response variable, when it is categorical.

parentFreq - a double array used to indicate which subset of the observations belongs in the current node.

splitValue - a double array representing the resulting split point if the selected variable is quantitative.

splitPartition - an int array indicating the resulting split partition if the selected variable is categorical.

- Returns:

an int specifying the column index of the split variable in xy.
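To give a feel for what a split-selection rule does, here is a standalone sketch that picks the quantitative predictor and threshold with the lowest weighted Gini impurity. It is deliberately simplified and hypothetical: the class GiniSplitSketch and its method use a reduced parameter list rather than the abstract method's signature, class labels are assumed to be 0, 1, ... stored as doubles, no values are missing, and returning -1 when no split exists is a convention of this sketch only. Gini impurity is used purely as an example criterion and is not claimed to match any algorithm in this package.

```java
/** Hypothetical split-selection sketch using weighted Gini impurity; not library code. */
final class GiniSplitSketch {

    /** Gini impurity 1 - sum of squared class proportions. */
    static double gini(int[] counts, int total) {
        if (total == 0) {
            return 0.0;
        }
        double sumOfSquares = 0.0;
        for (int count : counts) {
            double p = (double) count / total;
            sumOfSquares += p * p;
        }
        return 1.0 - sumOfSquares;
    }

    /**
     * Returns the column index of the best split variable and stores the
     * threshold in splitValue[0]; returns -1 if no threshold separates the rows.
     */
    static int selectSplitVariable(double[][] xy, int responseColumn, int numClasses,
                                   double[] splitValue) {
        int bestColumn = -1;
        double bestImpurity = Double.POSITIVE_INFINITY;
        int n = xy.length;
        for (int col = 0; col < xy[0].length; col++) {
            if (col == responseColumn) {
                continue;
            }
            for (double[] candidate : xy) { // every observed value is a candidate threshold
                double threshold = candidate[col];
                int[] leftCounts = new int[numClasses];
                int[] rightCounts = new int[numClasses];
                int nLeft = 0;
                for (double[] row : xy) {
                    int label = (int) row[responseColumn];
                    if (row[col] <= threshold) {
                        leftCounts[label]++;
                        nLeft++;
                    } else {
                        rightCounts[label]++;
                    }
                }
                int nRight = n - nLeft;
                if (nRight == 0) {
                    continue; // all rows fall on one side, so this is not a split
                }
                double impurity =
                        (nLeft * gini(leftCounts, nLeft) + nRight * gini(rightCounts, nRight)) / n;
                if (impurity < bestImpurity) {
                    bestImpurity = impurity;
                    bestColumn = col;
                    splitValue[0] = threshold;
                }
            }
        }
        return bestColumn;
    }
}
```

Writing the threshold into an output array mirrors the way the abstract method reports its split definition through the splitValue and splitPartition arguments.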

-

setAutoPruningFlag

public void setAutoPruningFlag(boolean autoPruningFlag)

Sets the flag to automatically prune the tree during the fitting procedure.

The default value is false. Set to true before calling fitModel() in order to prune the tree automatically. The pruning will use the cost-complexity value equal to minCostComplexityValue. See also pruneTree(double), which prunes the tree using a given cost-complexity value.

- Parameters:

autoPruningFlag - a boolean value that when true indicates that the maximally grown tree should be automatically pruned in fitModel().

Default: autoPruningFlag = false.

-

setConfiguration

protected void setConfiguration(PredictiveModel pm) throws DecisionTree.PruningFailedToConvergeException, PredictiveModel.StateChangeException, PredictiveModel.SumOfProbabilitiesNotOneException

Sets the configuration of PredictiveModel to that of the input model.

- Specified by:

setConfiguration in class PredictiveModel

- Parameters:

pm - a PredictiveModel object which is to have its attributes duplicated in this instance.

- Throws:

DecisionTree.PruningFailedToConvergeException - pruning has failed to converge.

PredictiveModel.StateChangeException - an input parameter has changed that might affect the model estimates or predictions.

PredictiveModel.SumOfProbabilitiesNotOneException - the sum of the probabilities does not equal 1.

-

setCostComplexityValues

public void setCostComplexityValues(double[] gammas)

Sets the cost-complexity values. For the original tree, the values are generated in fitModel() when isAutoPruningFlag() returns true.

- Parameters:

gammas - a double array containing cost-complexity values. This method is used when copying the configuration of one tree to another.

Default: gammas = setMinCostComplexityValue(double).

-

setMaxDepth

public void setMaxDepth(int nLevels)

Specifies the maximum tree depth allowed.

- Parameters:

nLevels - an int specifying the maximum depth that the DecisionTree is allowed to have. nLevels should be strictly positive.

Default: nLevels = 10.

-

setMaxNodes

public void setMaxNodes(int maxNodes)

Sets the maximum number of nodes allowed in a tree.

- Parameters:

maxNodes - an int specifying the maximum number of nodes allowed in a tree.

Default: maxNodes = 100.

-

setMinCostComplexityValue

public void setMinCostComplexityValue(double minCostComplexity)

Sets the minimum cost-complexity value.

- Parameters:

minCostComplexity - a double indicating the smallest value to use in cost-complexity pruning. The value must be in [0.0, 1.0].

Default: minCostComplexity = 0.

-

setMinObsPerChildNode

public void setMinObsPerChildNode(int nObs)

Specifies the minimum number of observations that a child node must have in order to split, one of several tree size and splitting control parameters.

- Parameters:

nObs - an int specifying the minimum number of observations that a child node must have in order to split the current node. nObs must be strictly positive. nObs should also not exceed the minimum number of observations required before a node can split (see setMinObsPerNode(int)).

Default: nObs = 7.

-

setMinObsPerNode

public void setMinObsPerNode(int nObs)

Specifies the minimum number of observations a node must have to allow a split, one of several tree size and splitting control parameters.

- Parameters:

nObs - an int specifying the number of observations the current node must have before considering a split. nObs should be greater than 1 but less than or equal to the number of observations in xy.

Default: nObs = 21.
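The two observation-count controls can be read together as a simple admissibility test for a candidate split. The sketch below is an interpretation, not the library's code: the class SplitControlSketch is hypothetical, and treating both limits as inclusive lower bounds is an assumption. The values 21 and 7 used in main are the defaults documented above.

```java
/** Hypothetical reading of the node-size controls as a split admissibility test. */
final class SplitControlSketch {

    /**
     * A split is allowed when the node holds at least minObsPerNode observations
     * and every resulting child holds at least minObsPerChildNode observations.
     */
    static boolean splitAllowed(int nodeObs, int[] childObs,
                                int minObsPerNode, int minObsPerChildNode) {
        if (nodeObs < minObsPerNode) {
            return false;
        }
        for (int obs : childObs) {
            if (obs < minObsPerChildNode) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        int minObsPerNode = 21;     // documented default of setMinObsPerNode
        int minObsPerChildNode = 7; // documented default of setMinObsPerChildNode
        System.out.println(splitAllowed(30, new int[] {20, 10}, minObsPerNode, minObsPerChildNode));
        System.out.println(splitAllowed(30, new int[] {25, 5}, minObsPerNode, minObsPerChildNode));
    }
}
```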

-

-