- java.lang.Object
  - com.imsl.datamining.PredictiveModel
    - com.imsl.datamining.decisionTree.DecisionTree
      - com.imsl.datamining.decisionTree.DecisionTreeInfoGain

All Implemented Interfaces:

- Serializable, Cloneable

public abstract class DecisionTreeInfoGain extends DecisionTree implements Serializable, Cloneable

Abstract class that extends `DecisionTree` for classes that use an information gain criterion.

See Also:

- Serialized Form

Nested Class Summary

Nested Classes

| Modifier and Type | Class and Description |
| --- | --- |
| `static class` | `DecisionTreeInfoGain.GainCriteria`: Specifies which information gain criterion to use in determining the best split at each node. |

Nested classes/interfaces inherited from class com.imsl.datamining.decisionTree.DecisionTree

DecisionTree.MaxTreeSizeExceededException, DecisionTree.PruningFailedToConvergeException, DecisionTree.PureNodeException


Nested classes/interfaces inherited from class com.imsl.datamining.PredictiveModel

PredictiveModel.PredictiveModelException, PredictiveModel.StateChangeException, PredictiveModel.SumOfProbabilitiesNotOneException, PredictiveModel.VariableType


Constructor Summary

Constructors

| Constructor and Description |
| --- |
| `DecisionTreeInfoGain(double[][] xy, int responseColumnIndex, PredictiveModel.VariableType[] varType)`: Constructs a `DecisionTree` object for a single response variable and multiple predictor variables. |

Method Summary

Methods

| Modifier and Type | Method and Description |
| --- | --- |
| `protected double` | `information(int[] x, int[] y, double[] classCounts, double[] weights, boolean xInfo)`: Returns the expected information of a variable `y` over a partition determined by the variable `x`. |
| `protected abstract int` | `selectSplitVariable(double[][] xy, double[] classCounts, double[] parentFreq, double[] splitValue, int[] splitPartition)`: Abstract method for selecting the next split variable and split definition for the node. |
| `void` | `setGainCriteria(DecisionTreeInfoGain.GainCriteria gainCriteria)`: Specifies which criterion to use in gain calculations to determine the best split at each node. |
| `void` | `setUseRatio(boolean ratio)`: Sets whether the gain ratio, rather than the gain, is used to determine the best split. |
| `boolean` | `useGainRatio()`: Returns whether the gain ratio, rather than the gain, is used to determine the best split. |

Methods inherited from class com.imsl.datamining.decisionTree.DecisionTree

fitModel, getCostComplexityValues, getDecisionTree, getFittedMeanSquaredError, getMaxDepth, getMaxNodes, getMeanSquaredPredictionError, getMinObsPerChildNode, getMinObsPerNode, getNumberOfComplexityValues, getNumberOfSets, isAutoPruningFlag, predict, predict, predict, printDecisionTree, printDecisionTree, pruneTree, setAutoPruningFlag, setConfiguration, setCostComplexityValues, setMaxDepth, setMaxNodes, setMinCostComplexityValue, setMinObsPerChildNode, setMinObsPerNode


Methods inherited from class com.imsl.datamining.PredictiveModel

getClassCounts, getCostMatrix, getMaxNumberOfCategories, getNumberOfClasses, getNumberOfColumns, getNumberOfMissing, getNumberOfPredictors, getNumberOfRows, getNumberOfUniquePredictorValues, getPredictorIndexes, getPredictorTypes, getPrintLevel, getPriorProbabilities, getResponseColumnIndex, getResponseVariableAverage, getResponseVariableMostFrequentClass, getResponseVariableType, getTotalWeight, getVariableType, getWeights, getXY, isMustFitModelFlag, isUserFixedNClasses, setClassCounts, setCostMatrix, setMaxNumberOfCategories, setNumberOfClasses, setPredictorIndex, setPredictorTypes, setPrintLevel, setPriorProbabilities, setWeights


Constructor Detail


DecisionTreeInfoGain

public DecisionTreeInfoGain(double[][] xy, int responseColumnIndex, PredictiveModel.VariableType[] varType)

Constructs a `DecisionTree` object for a single response variable and multiple predictor variables.

Parameters:

- `xy` - a `double` matrix with rows containing the observations on the predictor variables and one response variable.
- `responseColumnIndex` - an `int` specifying the column index of the response variable.
- `varType` - a `PredictiveModel.VariableType` array containing the type of each variable.
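Because `DecisionTreeInfoGain` is abstract, it is used through a concrete subclass. The sketch below does not call the library; it only illustrates the `xy` layout the parameters describe: one observation per row, with the response stored in column `responseColumnIndex`. The class `XyLayout` and its methods are hypothetical, and the sketch assumes every non-response column is a predictor (the inherited `setPredictorIndex` may change that in the real library, which is ignored here).

```java
import java.util.Arrays;

/**
 * Hypothetical helper (not part of the IMSL API) illustrating the xy layout:
 * each row is one observation, the column at responseColumnIndex holds the
 * response, and (by assumption) every other column is a predictor.
 */
final class XyLayout {

    /** Copies the response column out of xy. */
    static double[] response(double[][] xy, int responseColumnIndex) {
        double[] y = new double[xy.length];
        for (int i = 0; i < xy.length; i++) {
            y[i] = xy[i][responseColumnIndex];
        }
        return y;
    }

    /** Returns the indexes of all columns except the response column. */
    static int[] predictorIndexes(int nColumns, int responseColumnIndex) {
        int[] idx = new int[nColumns - 1];
        int k = 0;
        for (int j = 0; j < nColumns; j++) {
            if (j != responseColumnIndex) {
                idx[k++] = j;
            }
        }
        return idx;
    }

    public static void main(String[] args) {
        // Three observations: predictors in columns 0 and 1, categorical response in column 2.
        double[][] xy = {
            {0, 1.5, 1},
            {1, 2.0, 0},
            {2, 0.5, 1}
        };
        int responseColumnIndex = 2;
        System.out.println(Arrays.toString(response(xy, responseColumnIndex)));
        System.out.println(Arrays.toString(predictorIndexes(xy[0].length, responseColumnIndex)));
    }
}
```

Running `main` prints the response column `[1.0, 0.0, 1.0]` followed by the predictor indexes `[0, 1]`.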


Method Detail


information

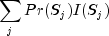

protected double information(int[] x, int[] y, double[] classCounts, double[] weights, boolean xInfo)

Returns the expected information of a variable `y` over a partition determined by the variable `x`.

Given a data subset $S = \{x_i, y_i\}$ containing both variables $X$ and $Y$, let $P(S) = \{S_1, \ldots, S_k\}$ be a partition of $S$ determined by the values in $X$. Then the expected information is

$$E(P(S), Y) = \sum_{j=1}^{k} \frac{|S_j|}{|S|} \, I(S_j, Y)$$

where $I(\cdot)$ is either the Shannon entropy or the Gini index, according to `DecisionTreeInfoGain.GainCriteria`. Note: if $X$ is constant, the return value is the Shannon entropy (or Gini index) of $Y$.

Parameters:

- `x` - an `int` array of length `xy.length` containing values of a predictor or an indicator vector defining the partition of the observations.
- `y` - an `int` array of length `xy.length` containing the values of the response variable.
- `classCounts` - a `double` array containing the counts for each class of the response variable, when it is categorical.
- `weights` - a `double` array used to indicate which observations belong to the current node.
- `xInfo` - a `boolean` selecting how probabilities are estimated: `true` uses a simple frequency estimate (used when getting information about `x` itself); `false` uses the prior probabilities.

Returns:

- a `double` indicating the information uncertainty.
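The expected-information formula can be sketched as follows. This is a minimal illustration, not the IMSL implementation: the class `ExpectedInformation` and its nested `Criterion` enum are hypothetical stand-ins, the sketch ignores the `classCounts` and `xInfo` parameters (it always uses simple weighted frequencies rather than prior probabilities), treats an observation with weight 0 as outside the current node, and assumes base-2 logarithms.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Minimal sketch (not the IMSL implementation) of
 * E(P(S), Y) = sum_j (|S_j| / |S|) * I(S_j, Y),
 * where the blocks S_j are the distinct values of x and I is the Shannon
 * entropy or the Gini index. Zero-weight observations are skipped.
 */
final class ExpectedInformation {

    /** Hypothetical stand-in for DecisionTreeInfoGain.GainCriteria. */
    enum Criterion { SHANNON_ENTROPY, GINI_INDEX }

    /** Impurity of a weighted class-frequency table whose entries sum to total. */
    static double impurity(Map<Integer, Double> freq, double total, Criterion c) {
        double result = (c == Criterion.GINI_INDEX) ? 1.0 : 0.0;
        for (double f : freq.values()) {
            if (f <= 0) continue;
            double p = f / total;
            if (c == Criterion.SHANNON_ENTROPY) {
                result -= p * (Math.log(p) / Math.log(2)); // entropy in bits (assumed base 2)
            } else {
                result -= p * p;                            // Gini index: 1 - sum of p^2
            }
        }
        return result;
    }

    static double information(int[] x, int[] y, double[] weights, Criterion c) {
        // Weighted class counts of y inside each block of the partition defined by x.
        Map<Integer, Map<Integer, Double>> blocks = new HashMap<>();
        Map<Integer, Double> blockTotals = new HashMap<>();
        double total = 0.0;
        for (int i = 0; i < y.length; i++) {
            double w = weights[i];
            if (w <= 0) continue; // observation is not in the current node
            blocks.computeIfAbsent(x[i], k -> new HashMap<>()).merge(y[i], w, Double::sum);
            blockTotals.merge(x[i], w, Double::sum);
            total += w;
        }
        // Weighted average of the block impurities.
        double expected = 0.0;
        for (Map.Entry<Integer, Map<Integer, Double>> e : blocks.entrySet()) {
            double nj = blockTotals.get(e.getKey());
            expected += (nj / total) * impurity(e.getValue(), nj, c);
        }
        return expected;
    }

    public static void main(String[] args) {
        int[] y = {0, 0, 1, 1};
        double[] w = {1, 1, 1, 1};
        int[] constant = {0, 0, 0, 0}; // one block: result is the impurity of y itself
        int[] perfect = {0, 0, 1, 1};  // x separates the two classes exactly
        System.out.println(information(constant, y, w, Criterion.SHANNON_ENTROPY)); // about 1 bit
        System.out.println(information(constant, y, w, Criterion.GINI_INDEX));      // about 0.5
        System.out.println(information(perfect, y, w, Criterion.SHANNON_ENTROPY));  // 0
    }
}
```

With a constant `x` the partition has a single block, so the result reduces to the impurity of `y` itself, matching the note above; a predictor that separates the classes perfectly yields 0.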


selectSplitVariable

protected abstract int selectSplitVariable(double[][] xy, double[] classCounts, double[] parentFreq, double[] splitValue, int[] splitPartition)

Abstract method for selecting the next split variable and split definition for the node.

Specified by:

- `selectSplitVariable` in class `DecisionTree`

Parameters:

- `xy` - a `double` matrix containing the data.
- `classCounts` - a `double` array containing the counts for each class of the response variable, when it is categorical.
- `parentFreq` - a `double` array used to indicate which observations belong to the current node.
- `splitValue` - a `double` array representing the resulting split point if the selected variable is quantitative.
- `splitPartition` - an `int` array indicating the resulting split partition if the selected variable is categorical.

Returns:

- an `int` specifying the column index of the split variable in `xy`.
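A subclass decides how the split variable is chosen. As a hedged illustration only, the sketch below picks the predictor column with the largest information gain, $H(Y) - E(P(S), Y)$, assuming every predictor is categorical and coded as small integer values stored in the `double` matrix. The class `SplitByGain` is hypothetical; it is not the C4.5 or IMSL algorithm, uses unit weights instead of `parentFreq`, and does not fill the `splitValue` or `splitPartition` output arrays.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical sketch: choose the categorical predictor with the largest
 * information gain H(Y) - E(P(S), Y). Returns the column index in xy,
 * or -1 when no predictor has positive gain.
 */
final class SplitByGain {

    /** Base-2 entropy of a class-count table whose counts sum to n. */
    static double entropy(Map<Integer, Integer> counts, int n) {
        double h = 0.0;
        for (int c : counts.values()) {
            double p = (double) c / n;
            h -= p * Math.log(p) / Math.log(2);
        }
        return h;
    }

    /** Expected entropy of column yCol over the partition induced by column xCol. */
    static double expectedEntropy(double[][] xy, int xCol, int yCol) {
        Map<Integer, Map<Integer, Integer>> blocks = new HashMap<>();
        for (double[] row : xy) {
            blocks.computeIfAbsent((int) row[xCol], k -> new HashMap<>())
                  .merge((int) row[yCol], 1, Integer::sum);
        }
        double e = 0.0;
        for (Map<Integer, Integer> block : blocks.values()) {
            int nj = block.values().stream().mapToInt(Integer::intValue).sum();
            e += (double) nj / xy.length * entropy(block, nj);
        }
        return e;
    }

    static int selectSplitVariable(double[][] xy, int responseColumnIndex) {
        // H(Y): entropy of the response over the whole node.
        Map<Integer, Integer> all = new HashMap<>();
        for (double[] row : xy) all.merge((int) row[responseColumnIndex], 1, Integer::sum);
        double hY = entropy(all, xy.length);

        int best = -1;
        double bestGain = 0.0;
        for (int j = 0; j < xy[0].length; j++) {
            if (j == responseColumnIndex) continue;
            double gain = hY - expectedEntropy(xy, j, responseColumnIndex);
            // Small tolerance so floating-point noise does not count as a gain.
            if (gain > bestGain + 1e-12) {
                best = j;
                bestGain = gain;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Column 0 is noise; column 1 determines the response in column 2.
        double[][] xy = {
            {0, 0, 0},
            {1, 0, 0},
            {0, 1, 1},
            {1, 1, 1}
        };
        System.out.println(selectSplitVariable(xy, 2)); // prints 1
    }
}
```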


setGainCriteria

public void setGainCriteria(DecisionTreeInfoGain.GainCriteria gainCriteria)

Specifies which criterion to use in gain calculations to determine the best split at each node.

Parameters:

- `gainCriteria` - a `DecisionTreeInfoGain.GainCriteria` specifying which criterion to use in gain calculations to determine the best split at each node.

Default: `gainCriteria = DecisionTreeInfoGain.GainCriteria.SHANNON_ENTROPY`
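For reference, the two criteria are the standard impurity measures below, written for class proportions $p_1, \ldots, p_K$ within a node. The logarithm base is a convention the source does not state; base 2 is assumed here, which gives entropy in bits. The worked values show that the two measures differ in scale, so gains computed under different criteria are not directly comparable.

```latex
H(p) = -\sum_{k=1}^{K} p_k \log_2 p_k, \qquad G(p) = 1 - \sum_{k=1}^{K} p_k^{2}

% Worked example: two equally frequent classes, p = (1/2, 1/2)
H = -2 \cdot \tfrac{1}{2} \log_2 \tfrac{1}{2} = 1, \qquad G = 1 - 2 \cdot \tfrac{1}{4} = \tfrac{1}{2}
```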


setUseRatio

public void setUseRatio(boolean ratio)

Sets whether the gain ratio, rather than the gain, is used to determine the best split.

Parameters:

- `ratio` - a `boolean` indicating whether the gain ratio is to be used. `true` results in the gain ratio being used; `false` indicates the gain is to be used.

Default: `useRatio = false`
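The gain ratio is commonly defined, as in C4.5, as the gain divided by the split information, which is the entropy of the partition sizes induced by `x`. The sketch below uses that textbook definition; whether the library computes it exactly this way is an assumption (the `xInfo` flag of `information` suggests a similar frequency-based split information, but the source does not spell it out). `GainRatio` is a hypothetical class that assumes unit weights and base-2 logarithms.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical sketch of the C4.5-style gain ratio:
 * gainRatio = gain / splitInfo, where splitInfo is the entropy of the
 * distribution of x values. Unit weights and base-2 logs are assumed.
 */
final class GainRatio {

    /** Base-2 entropy of the empirical distribution of the values in v. */
    static double entropy(int[] v) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int value : v) counts.merge(value, 1, Integer::sum);
        double h = 0.0;
        for (int c : counts.values()) {
            double p = (double) c / v.length;
            h -= p * Math.log(p) / Math.log(2);
        }
        return h;
    }

    /** Expected entropy of y over the partition defined by the values of x. */
    static double expectedEntropy(int[] x, int[] y) {
        Map<Integer, List<Integer>> blocks = new HashMap<>();
        for (int i = 0; i < x.length; i++) {
            blocks.computeIfAbsent(x[i], k -> new ArrayList<>()).add(y[i]);
        }
        double e = 0.0;
        for (List<Integer> block : blocks.values()) {
            int[] yj = block.stream().mapToInt(Integer::intValue).toArray();
            e += (double) yj.length / x.length * entropy(yj);
        }
        return e;
    }

    /** Information gain: H(Y) minus the expected entropy after splitting on x. */
    static double gain(int[] x, int[] y) {
        return entropy(y) - expectedEntropy(x, y);
    }

    /** Gain divided by split information; 0 when x is constant (no split possible). */
    static double gainRatio(int[] x, int[] y) {
        double splitInfo = entropy(x);
        return splitInfo > 0 ? gain(x, y) / splitInfo : 0.0;
    }

    public static void main(String[] args) {
        int[] y  = {0, 0, 1, 1};
        int[] id = {0, 1, 2, 3}; // unique "ID" column: perfect split into 4 tiny blocks
        int[] b  = {0, 0, 1, 1}; // binary predictor that also separates the classes
        System.out.println("gain:  id=" + gain(id, y) + "  b=" + gain(b, y));
        System.out.println("ratio: id=" + gainRatio(id, y) + "  b=" + gainRatio(b, y));
    }
}
```

Both predictors have a gain of 1 bit, but the ID-like column has split information 2, so its gain ratio is 0.5 versus 1 for the binary predictor. This is the usual motivation for `setUseRatio(true)`: plain gain favors predictors with many distinct values.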


useGainRatio

public boolean useGainRatio()

Returns whether the gain ratio, rather than the gain, is to be used to determine the best split.

Returns:

- a `boolean` indicating whether the gain ratio is to be used. `true` results in the gain ratio being used; `false` indicates the gain is to be used.