Usage Notes

The functions in this chapter assume the time series does not contain any missing values. If missing values are present, they should be set to NaN (see Chapter 15, “Utilities”) function imsls_f_machine), and the function will return an appropriate error message. To enable fitting of the model, the missing values must be replaced by appropriate estimates.

Model Construction and Evaluation Utilties

A major component of the model identification step concerns determining if a given time series is stationary. The sample correlation functions computed by functions imsls_f_autocorrelation, imsls_f_crosscorrelation, imsls_f_multi_crosscorrelation, and imsls_f_partial_autocorrelation may be used to diagnose the presence of nonstationarity in the data, as well as to indicate the type of transformation required to induce stationarity. The family of power transformations provided by function imsls_f_box_cox_transform coupled with the ability to difference the transformed data using function imsls_f_difference affords a convenient method of transforming a wide class of nonstationary time series to stationarity.

The “raw” data, transformed data, and sample correlation functions also provide insight into the nature of the underlying model. Typically, this information is displayed in graphical form via time series plots, plots of the lagged data, and various correlation function plots.

The observed time series may also be compared with time series generated from various theoretical models to help identify possible candidates for model fitting. The function imsls_f_random_arma (see Chapter 12, “Random Number Generation”) may be used to generate a time series according to a specified autoregressive moving average model.

ARIMA Models

Once the data are transformed to stationarity, a tentative model in the time domain is often proposed and parameter estimation, diagnostic checking and forecasting are performed.

ARIMA Model (Autoregressive Integrated Moving Average)

A small, yet comprehensive, class of stationary time-series models consists of the nonseasonal ARMA processes defined by

φ(B) (Wt − μ) = θ(B)At, t ∈ Z

where Z = {..., −2, −1, 0, 1, 2, ...} denotes the set of integers, B is the backward shift operator defined by BkWt = Wt-k, μ is the mean of Wt, and the following equations are true:

φ(B) = 1 − φ1B − φ2B2 − ... − φpBp, p ≥ 0

θ(B) = 1 − θ1B − θ2B2 − ... − θqBq, q ≥ 0

The model is of order (p, q) and is referred to as an ARMA (p, q) model.

An equivalent version of the ARMA (p, q) model is given by

φ(B) Wt = θ0 + θ(B)At, t ∈ Z

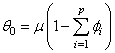

where θ0 is an overall constant defined by the following:

See Box and Jenkins (1976, pp. 92−93) for a discussion of the meaning and usefulness of the overall constant.

If the “raw” data, {Zt}, are homogeneous and nonstationary, then differencing using imsls_f_difference induces stationarity, and the model is called ARIMA (AutoRegressive Integrated Moving Average). Parameter estimation is performed on the stationary time series Wt = ∇dZt , where ∇d = (1 − B)d is the backward difference operator with period 1 and order d, d > 0.

Typically, the method of moments includes argument IMSLS_METHOD_OF_MOMENTS in a call to function imsls_f_arma for preliminary parameter estimates. These estimates can be used as initial values into the least-squares procedure by including argument IMSLS_LEAST_SQUARES in a call to function imsls_f_arma. Other initial estimates provided by the user can be used. The least-squares procedure can be used to compute conditional or unconditional least-squares estimates of the parameters, depending on the choice of the backcasting length. The parameter estimates from either the method of moments or least-squares procedures can be input to function imsls_f_arma_forecast through the arma_info structure. The functions for preliminary parameter estimation, least-squares parameter estimation, and forecasting follow the approach of Box and Jenkins (1976, Programs 2−4, pp. 498−509).

Regression in Autoregressive Integrated Moving Average

There may be one or more external time series that relate

to the time series of interest, which may be useful in improving

forecasts. Function imsls_f_regression_arima allows for

the inclusion of one or more regression time series in the above ARIMA

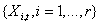

model. That is, if there are r time series  associated with a times series

Yt, the regression ARIMA

model (integrated of order d ) is

associated with a times series

Yt, the regression ARIMA

model (integrated of order d ) is

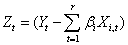

where

.

.

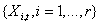

That is, Zt is the residual

(indexed by t) of the regression of Yt on  .

.

Automatic ARIMA Selection and Fitting Utilities

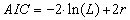

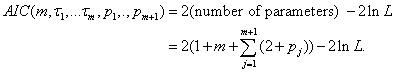

A popular criterion for comparing autoregressive-moving average (ARMA) models with different lags is a measure known as Akaike’s Information Criterion (AIC). The AIC for an ARMA univariate series is calculated by:

,

,

where L = the value for the maximum likelihood function for the fitted model, and r = p + q + 1, the number of parameters in the ARMA model. To use the criterion, several choices for p and q are fit to a time series and the fit with the smallest AIC is considered best. The function, imsls_f_auto_uni_ar uses the AIC criterion to select a best fitting AR model. The function imsls_f_auto_arima performs a more comprehensive search, considering not only the ARMA parameters, but also the appropriate Box-Cox transformation, degree of differencing and seasonal adjustment, and also filters the data for outliers by calling imsls_f_ts_outlier_identification.

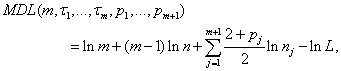

The function imsls_f_auto_parm uses a second criterion, called “Minimum Description Length” or MDL, to automatically fit piecewise AR models to a time series with structural breaks (i.e., a potentially non-stationary time series having stationary segments).

The MDL is defined as

where m is the number of structural breaks in the

series,  are the locations of the breaks,

nj is the number of

observations in the j-th segment,

pj is the order of the AR model

fit to the j-th segment, and

L is the combined likelihood over all segments. imsls_f_auto_parm

also allows the choice to use the AIC criterion,

are the locations of the breaks,

nj is the number of

observations in the j-th segment,

pj is the order of the AR model

fit to the j-th segment, and

L is the combined likelihood over all segments. imsls_f_auto_parm

also allows the choice to use the AIC criterion,

Exponential Smoothing Methods

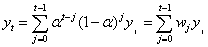

Exponential smoothing approximates the value of a time series at time t with a weighted average of previous values, with weights defined in such a way that they decay exponentially over time.

The weights can be determined by a smoothing parameter α and the relation,

The parameter α is on the interval (0,1) and controls the rate of decay. For values close to 1, the effect decays rapidly, whereas for values close to 0, the influence of a past value persists for some time. Exponential smoothing as a forecasting procedure is widely used and largely effective for short term, mean level forecasting. With the addition of a term for linear trend and terms or factors for seasonal patterns, exponential smoothing is an intuitive procedure for many series that arise in business applications. The function imsls_f_hw_time_series performs the Holt-Winters method, otherwise known as triple exponential smoothing, and allows for either an additive or a multiplicative seasonal effect.

Garch Models

An important assumption in the ARMA model

φ(B) Wt = θ0 + θ(B)At, t ∈ Z

is that the errors At are independent random variables with mean 0 and constant variance, σ2.

For some time series, the assumptions of independent errors and constant variance will not hold for the underlying series. For example, in the stock market, large errors in one direction are often followed by large errors in the opposite direction. Variability over time can increase with a price level or trading volume. To account for heteroscedastic (non-equal) variances, Engle (1982) introduced the Autoregressive Conditional Heteroscedastic or ARCH, model:

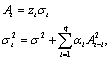

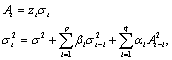

where the zt’s are independent and identically distributed standard normal random variables. In the ARCH model, the variance term depends on previous squared errors, up to a given lag q. A generalized ARCH model, called GARCH, was introduced by Bollerslev (1986) and has the form:

In the GARCH model, the variance has an auto-regressive term in addition to the squared error term. The function imsls_f_garch estimates ARCH or GARCH models.

State-Space Models

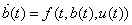

A state-space model is represented by two equations: the state equation

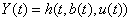

and the observation equation,

where b(t) is the state variable, Y(t) is the observation variable, u(t) is a vector of inputs, and

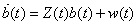

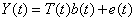

State-space models originated in the study of dynamical systems. The system state b(t) is not directly observed but is inferred from the observable variable, Y(t), through the relation defined by the function, h. Y(t) is sometimes called the measurement variable or output variable. While f and h are completely general functions in the definition, they are most often linear, based on the assumption that the underlying system behaves linearly or approximately so. There is often a stochastic or noise term added to the equations and, in the time series context, there are usually no control inputs (u(t) = 0). Under these conditions, the state-space model takes the form,

and

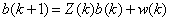

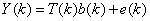

where Z and T are known matrices and w and e are noise variables. Time may evolve continuously or discretely. For a discrete time variable, it is customary to write the equations as:

and

where k = …-3, -2, -1, 0, 1, 2, 3, ….

Many time series can be expressed in the state-space form, including ARIMA models (See Section 5.5 in Box, Jenkins, and Reinsel (2008)). For ARIMA models, the state-space form is convenient for the calculation of the likelihood and forecasts, and for handling missing values. Once a time series is formulated as a state-space model, the problem becomes one of solving the equations for the unknown parameters. The Kalman filter (imsls_f_kalman) is a recursive method for solving the state-space equations when the functions f and h are linear.